Satellite drag and self-correcting language models: Khoury undergrads take on NeurIPS conference

Author: Madelaine Millar

Date: 03.11.24

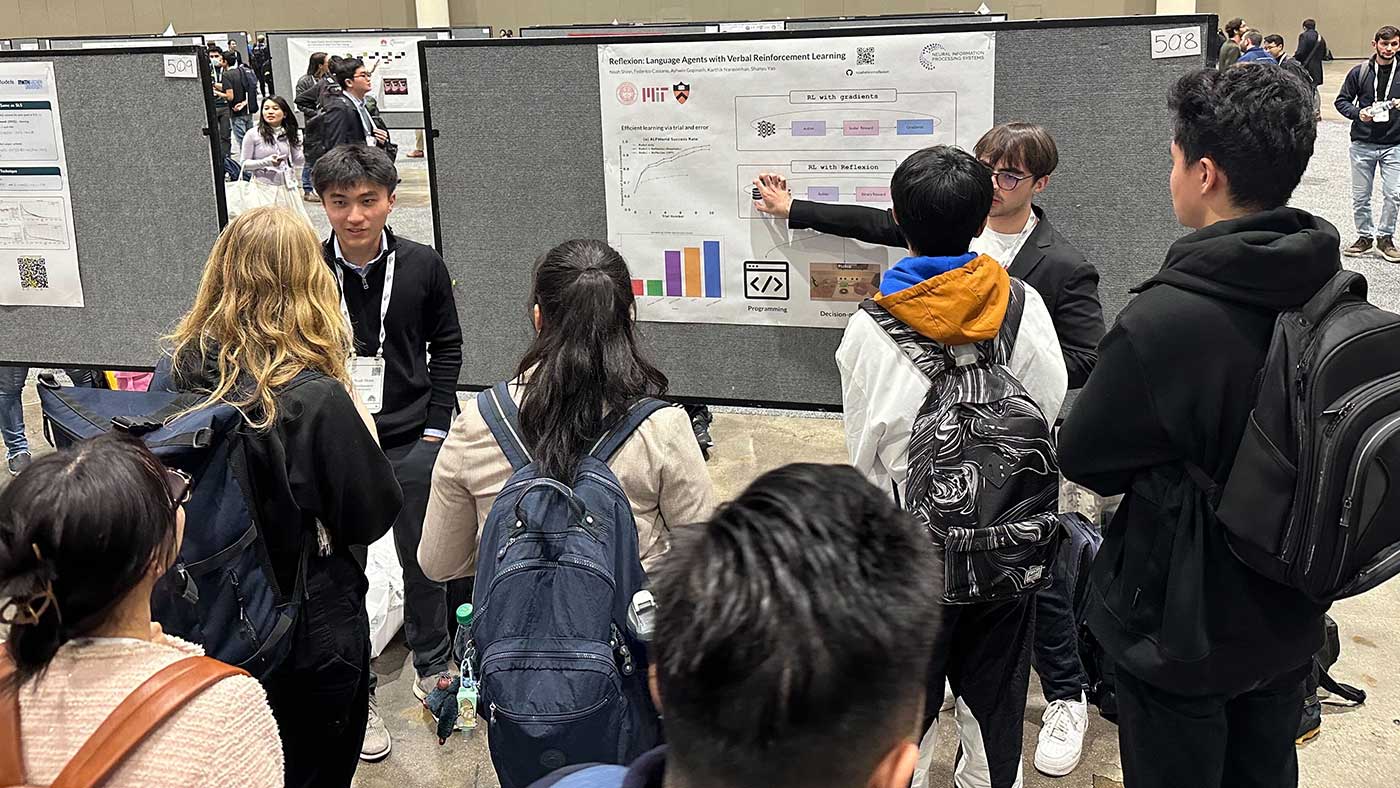

Khoury undergrads Noah Shinn (left) and Federico Cassano (right), with Princeton doctoral student Shunyu Yao.

The Neural Information Processing Systems (NeurIPS) Conference is one of the most selective and respected machine learning conferences in the world. As such, undergraduate acceptances to December’s New Orleans-based gathering — which covered topics ranging from machine learning and neuroscience to computer vision, statistical linguistics, and information theory — were uncommon, and a high honor.

So imagine their pride and surprise when Khoury College undergraduates Federico Cassano, Noah Shinn, and Neel Sortur learned they’d be among this top conference’s attendees.

In the main conference, Shinn and Cassano presented a poster on their large language model verification technique, which improves on the accuracy of the previous state of the art by 11 percentage points. Sortur took part in a workshop on symmetry and geometry that aligned with his own paper, in which he used neural networks to model satellite drag. The students expressed gratitude for Khoury College’s help in attending the conference, which Cassano described as an exciting opportunity to share his art.

“Khoury College is happy to provide funding support to help defray conference attendance costs,” said Jessica Biron, senior director of undergraduate programs at Khoury College. “Undergraduate students presenting at such a prestigious conference is a huge personal achievement, and we are very proud of our students!”

Training large language models to correct their own mistakes

As large language models (LLMs) develop, it’s becoming clear that there are things they are really good at — such as generating a block of text or code, or spotting and correcting errors in an existing block — and things they aren’t so good at, like generating a truthful and accurate block the first time around. But is it possible to use the tech’s strengths to address its shortcomings?

That’s the idea behind Reflexion, a framework developed by Khoury undergraduates Noah Shinn and Federico Cassano, with support from MIT professor Ashwin Gopinath, Princeton University professor Karthik Narasimhan, and Princeton doctoral student Shunyu Yao.

When an LLM spits out a solution, Reflexion automatically feeds that solution into an evaluator tool, then uses the evaluator’s feedback to reflect on and correct any mistakes. By simply asking the model to automatically verify its work one time, Shinn and Cassano achieved 91% accuracy on the HumanEval coding benchmark, an 11-percentage-point gain over the previous state of the art, the GPT-4 model. Beyond code generation, the process can also be applied to reasoning and decision-making tasks. The team’s paper has already been cited around 200 times, and their verification technique has been used by large AI labs like Google’s DeepMind.
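The generate, evaluate, reflect cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the team’s implementation: the names `reflexion_loop`, `LoopResult`, and the three `toy_*` functions are hypothetical, and the toy generator stands in for a real LLM call so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical type aliases for this sketch (not taken from the Reflexion code).
Generator = Callable[[str, List[str]], str]    # (task, reflections) -> candidate
Evaluator = Callable[[str], Tuple[bool, str]]  # candidate -> (passed, feedback)
Reflector = Callable[[str, str], str]          # (candidate, feedback) -> reflection


@dataclass
class LoopResult:
    candidate: str  # last solution produced
    passed: bool    # whether the evaluator accepted it
    trials: int     # number of generation attempts used


def reflexion_loop(task: str, generate: Generator, evaluate: Evaluator,
                   reflect: Reflector, max_trials: int = 3) -> LoopResult:
    """Generate a solution, check it, and retry with accumulated reflections."""
    reflections: List[str] = []
    candidate, passed = "", False
    for trial in range(1, max_trials + 1):
        candidate = generate(task, reflections)
        passed, feedback = evaluate(candidate)
        if passed:
            return LoopResult(candidate, True, trial)
        # Turn the evaluator's feedback into a note for the next attempt.
        reflections.append(reflect(candidate, feedback))
    return LoopResult(candidate, passed, max_trials)


def toy_generate(task: str, reflections: List[str]) -> str:
    # Stand-in for an LLM: the first attempt is buggy, later ones are fixed.
    if not reflections:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def toy_evaluate(candidate: str) -> Tuple[bool, str]:
    # Stand-in for an evaluator tool: run the code against one unit test.
    namespace: dict = {}
    exec(candidate, namespace)
    got = namespace["add"](2, 3)
    if got == 5:
        return True, "all tests passed"
    return False, f"add(2, 3) returned {got}, expected 5"


def toy_reflect(candidate: str, feedback: str) -> str:
    # Stand-in for the model's self-reflection on the failure.
    return f"Previous attempt failed: {feedback}. Re-check the operator."


result = reflexion_loop("Write add(a, b)", toy_generate, toy_evaluate, toy_reflect)
print(result.passed, result.trials)  # prints "True 2"
```

In this toy run the first attempt fails its unit test, the failure message becomes a reflection, and the second attempt passes. With a real model, the generator and reflector would both be LLM calls, and the evaluator could be a test suite or another model.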

“It turns out that using the model to generate a verification step is much more accurate than using the model to generate the data,” said Cassano, second author on the paper and a third-year combined cybersecurity and economics major.

Cassano enjoyed that the NeurIPS poster session let him face questions and pushback about Reflexion from experts in the field, which helped him to brainstorm ways to further develop the framework. Up next: making the process faster and cheaper by building the self-correcting step into the LLM architecture itself, instead of treating it as an add-on process.

Cassano and Shinn present their work at the NeurIPS poster session.

Beyond the college’s financial support for the conference trip, Cassano credited his classes with inspiring him to pursue this line of research in the first place. Specifically, his “Artificial Intelligence” class with professor Steven Holtzen had a huge impact.

“Initially, I didn’t realize my interest in AI; I was always fascinated by symbolic reasoning,” Cassano said. “In the class, I discovered that AI could integrate with symbolic reasoning, blending two areas I am passionate about. That truly captivated my interest in AI.”

How to stop satellites from crashing into each other

There are more than 8,000 satellites orbiting Earth, each one whipping around the planet at thousands of miles per hour. To avoid collisions, it’s important to know where they’re all going, and fourth-year computer science major Neel Sortur has found a way to use deep learning to help.

With support from Khoury doctoral student Linfeng Zhao, professor Robin Walters, and the Geometric Learning Lab, Sortur developed neural network models to predict the drag force that acts on symmetrical satellites orbiting Earth. While it’s possible to compute the satellites’ future trajectories and prevent disasters using more traditional methods, doing so is prohibitively expensive, and fairly uncertain when making long-term predictions. Sortur’s machine learning methods help put safety within reach.

Neel Sortur

Avoiding such collisions is vital because of a phenomenon called Kessler syndrome. If even a couple of satellites collide, the debris could cause a cascade that damages a huge number of satellites. If enough damage occurs, it could create a debris field around Earth that would make it difficult or impossible to launch new orbiters into space.

“GPS would stop working; radar for a lot of Earth imaging, for detecting climate change, would stop working,” Sortur said. “If you can accurately model the drag coefficient and track where satellites will be, you can stop this at the source.”
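For readers unfamiliar with the term, the drag coefficient Sortur mentions appears in the standard drag equation from fluid dynamics. It is shown here as general background, not as the specific model used in his paper:

$$
F_D = \tfrac{1}{2}\,\rho\,v^{2}\,C_D\,A
$$

Here $F_D$ is the drag force, $\rho$ is the density of the surrounding atmosphere, $v$ is the satellite’s speed relative to that atmosphere, $C_D$ is the drag coefficient, and $A$ is the reference (cross-sectional) area. Because $F_D$ scales linearly with $C_D$, any error in the estimated coefficient carries over proportionally into the predicted drag force, and from there into the predicted trajectory.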

This was Sortur’s maiden first-author paper, and he made use of many Northeastern resources to take his project from ideation to presentation. Looking back, he suggests that other students interested in research make use of the Office of Undergraduate Research and Fellowships, and that they reach out to doctoral students already researching in their areas of interest. He also specifically thanked Walters, his supervisor and the paper’s third author.

“He’s super supportive; I think it’s great that he was willing to chat so much during the project,” Sortur said. “We met every week.”

Although Sortur was nervous going into the NeurIPS Workshop on Symmetry and Geometry in Neural Representations, he found the conference incredibly rewarding.

“Everyone is thinking at such a high level. There’s a certain bar that everyone is at, so you could have really, really intellectual conversations,” Sortur said. “The professors I looked up to and many influential researchers were willing to talk to everyone … I didn’t feel like an undergrad student for a bit. People just wanted to know about your research and your methods, and they didn’t care what your background was. It felt really good to be in that environment.”
