Professor Ehsan Elhamifar’s DARPA award focuses on AI and augmented reality

Author: Tracy Geary

Date: 01.13.22

Imagine a world where you can rely on an adaptive and interactive virtual task assistant to help you do everything from trying a new recipe to fixing a broken water heater.

Thanks to Ehsan Elhamifar, assistant professor in the Khoury College of Computer Sciences at Northeastern University and director of the Mathematical, Computational and Applied Data Science (MCADS) Lab, that world is one step closer to becoming a reality.

Ehsan Elhamifar.

He explained the specific focus of his work in this area. “Some of the research in my lab addresses automatic understanding of such procedural videos and learning from them using structured visual summarization methods and techniques. Summarization specifically reduces each video to a small number of representative frames, and in our case, those representatives must be from task steps, which are common across videos,” Elhamifar said.
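To make the idea of reducing a video to a few representative frames concrete, here is a minimal sketch. It is not the lab's method: it uses a generic greedy farthest-point selection over per-frame feature vectors, and the function name `select_representatives` and the toy feature data are assumptions made for illustration. It also does not model the requirement that representatives come from task steps shared across videos.

```python
import math


def select_representatives(frames, k):
    """Return sorted indices of up to k representative frames.

    `frames` is a list of equal-length feature vectors (hypothetical
    per-frame features). Selection is greedy farthest-point: start near
    the average frame, then repeatedly add the frame that is farthest
    from everything chosen so far.
    """
    if not frames or k <= 0:
        return []
    k = min(k, len(frames))

    # Seed with the frame closest to the mean feature vector.
    dim = len(frames[0])
    mean = [sum(f[d] for f in frames) / len(frames) for d in range(dim)]
    first = min(range(len(frames)), key=lambda i: math.dist(frames[i], mean))
    chosen = [first]

    # nearest[i] = distance from frame i to its closest chosen frame.
    nearest = [math.dist(f, frames[first]) for f in frames]
    while len(chosen) < k:
        nxt = max(range(len(frames)), key=lambda i: nearest[i])
        chosen.append(nxt)
        nearest = [
            min(nearest[i], math.dist(frames[i], frames[nxt]))
            for i in range(len(frames))
        ]
    return sorted(chosen)


# Toy example: five "frames" that fall into three visually distinct groups.
toy_frames = [[0, 0], [0.1, 0], [5, 5], [5.1, 5], [10, 0]]
summary = select_representatives(toy_frames, 3)
```

In this toy input, the selection keeps one frame from each of the three groups, which is the intuition behind summarizing a long video with a handful of frames.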

DARPA, an agency within the U.S. Department of Defense (DoD), is responsible for catalyzing the development of technologies that maintain and advance the capabilities of the U.S. military. The grant, a multimillion-dollar, multi-university effort, is being led by Elhamifar at Northeastern’s Khoury College in collaboration with Misha Sra at UC Santa Barbara and Minh Nguyen at Stony Brook University.

Being awarded this grant was “a big moment for me and for the other schools,” Elhamifar said.

Developing the technology for AI task assistants in two domains

“Our project is part of the Perceptual Task Guidance (PTG) program recently announced by DARPA,” Elhamifar explains. The three universities “bring together different skills to the project, covering visual perception, visual reasoning, user modeling, and augmented reality. We will be pushing the boundaries of deep learning for perception and attention, automated reasoning, and augmented reality to achieve the goals of this project.”

“Each school will bring their expertise,” Elhamifar continued. “At the end of the day, there will be integration and collaboration.”

As Elhamifar explains, DARPA is interested in two domains: one involving medical assistance and the other involving pilots. “Military personnel need to perform an increasing number of more complex tasks. For example, medics must perform various procedures over extended periods of time, and mechanics need to repair increasingly sophisticated machines.”

The goal of the project is to develop methods and technology for AI assistants that provide timely visual and audio feedback to users to help in performing physical tasks.

Elhamifar described the intended user situation: “The user wears cameras and a microphone, which allow our system to see and hear what the user sees and hears, and an augmented reality headset through which our system provides timely and adaptive feedback, guiding users of all skill levels through familiar and unfamiliar tasks.” What makes the technology helpful? He continued, “The system will provide instructions. For example, the system can suggest remedial actions when the user has made a mistake or is about to do so. Or when the user is faced with a completely new task, the system provides step-by-step visual and audio instructions based on the user’s skills and cognitive style.”

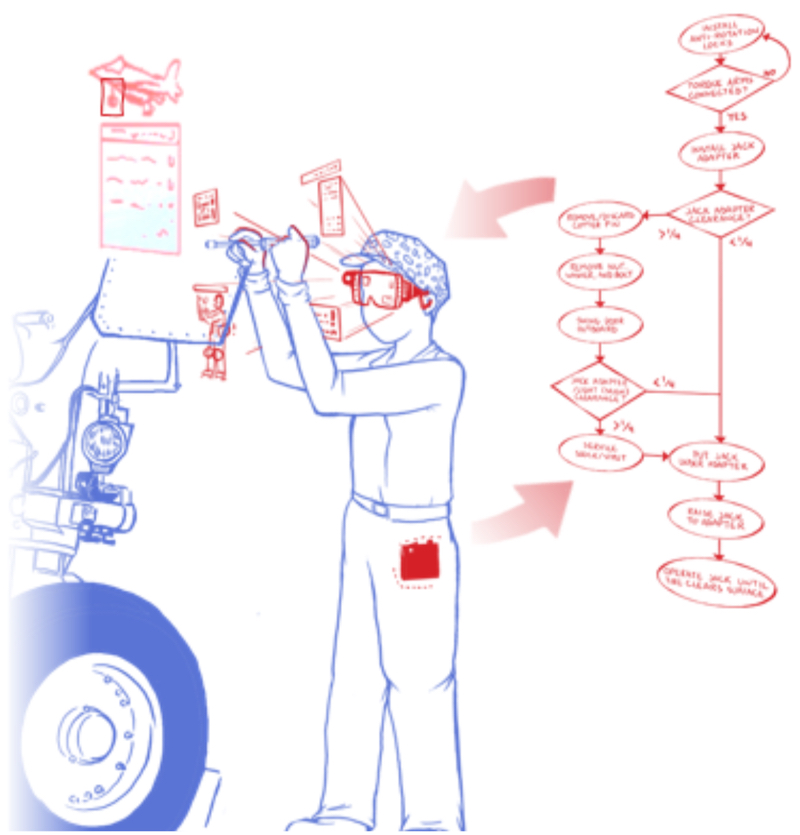

Image: Perceptually-enabled Task Guidance (PTG), Broad Agency Announcement HR001121S0015, DARPA (March 2021)

The research team calls its new technology AI2ART Assistant, which stands for adaptive and interactive AI-AR task assistant, as the system integrates AI with augmented reality and adapts to the user’s skill level, attention, and cognitive style.

Several unique challenges are associated with the project. For example, being able to learn models of tasks and recognize them with high accuracy and in real time from a tiny number of training videos and resources is a major undertaking. Another challenge is for the system to understand the user’s cognitive style and attention and emotional state during task execution and integrate them into its prediction models.

Elhamifar pointed out that since different types of learners will be using this product, “we must take into account the style of the user and the different skill levels.”

Assistive technologies potentially helpful to regular users, too

To address the many challenges they face while meeting the research goals, Elhamifar explained, “We will push the boundaries of science and engineering in this project to address these challenges to develop assistive technologies that in real-time understand and evaluate tasks and subtasks performed by the user and provide just-in-time feedback according to the user’s style and state.”

Elhamifar asserted that, while the system is being built for DARPA and will be extremely helpful to pilots and soldiers on missions, its ultimate use will not be just for the military, but for all people.

“We believe that our system would not only be useful to DARPA and the military, but also to regular users and people who want to perform new or complex tasks,” Elhamifar stated. “Imagine you want to cook a new recipe. Instead of searching on the internet, reading different instructions for the same recipe and watching YouTube videos, our system provides step-by-step audiovisual instructions and corrects and guides you as you work through making the recipe.”

Another example, said Elhamifar, is needing to repair a device at home or a malfunctioning car part. “You can wear our AI2ART assistant, which tells you what to do and how to do it.”

Third-year Khoury College doctoral students Zijia Lu and Yuhan Shen are part of the team on this DARPA project, working on new methods to address challenges related to understanding and analyzing egocentric videos of users in real time. “They have developed efficient and high-performance methods for learning from procedural videos of complex activities and have tested our methods on real-world challenging datasets,” Elhamifar explained.

Elhamifar is no stranger to DARPA, having been awarded the prestigious DARPA Young Faculty Award (YFA) in 2018 for his research on structured summarization of very large and complex data. The award honors scholars who plan to focus a significant portion of their career on DoD and national security issues. Elhamifar pointed out that the line of research supported by the DARPA YFA program has resulted in “a solid foundation and a main building block for our AI2ART system.”

The first-year goal of the planned four-year project is to present to DARPA a demonstration model that will guide the user through a task. By the end of the fourth year, Elhamifar believes the system will be fully developed and able to guide users through multiple tasks in a time- and energy-efficient way.

“DARPA is one of the leading agencies in developing and supporting cutting-edge technology and research,” Elhamifar noted. “We are excited to have this tremendous opportunity of working with them.”
