Khoury theory researchers earn a pair of “best paper” honors

Author: Attrayee Chakraborty

Date: 08.28.23

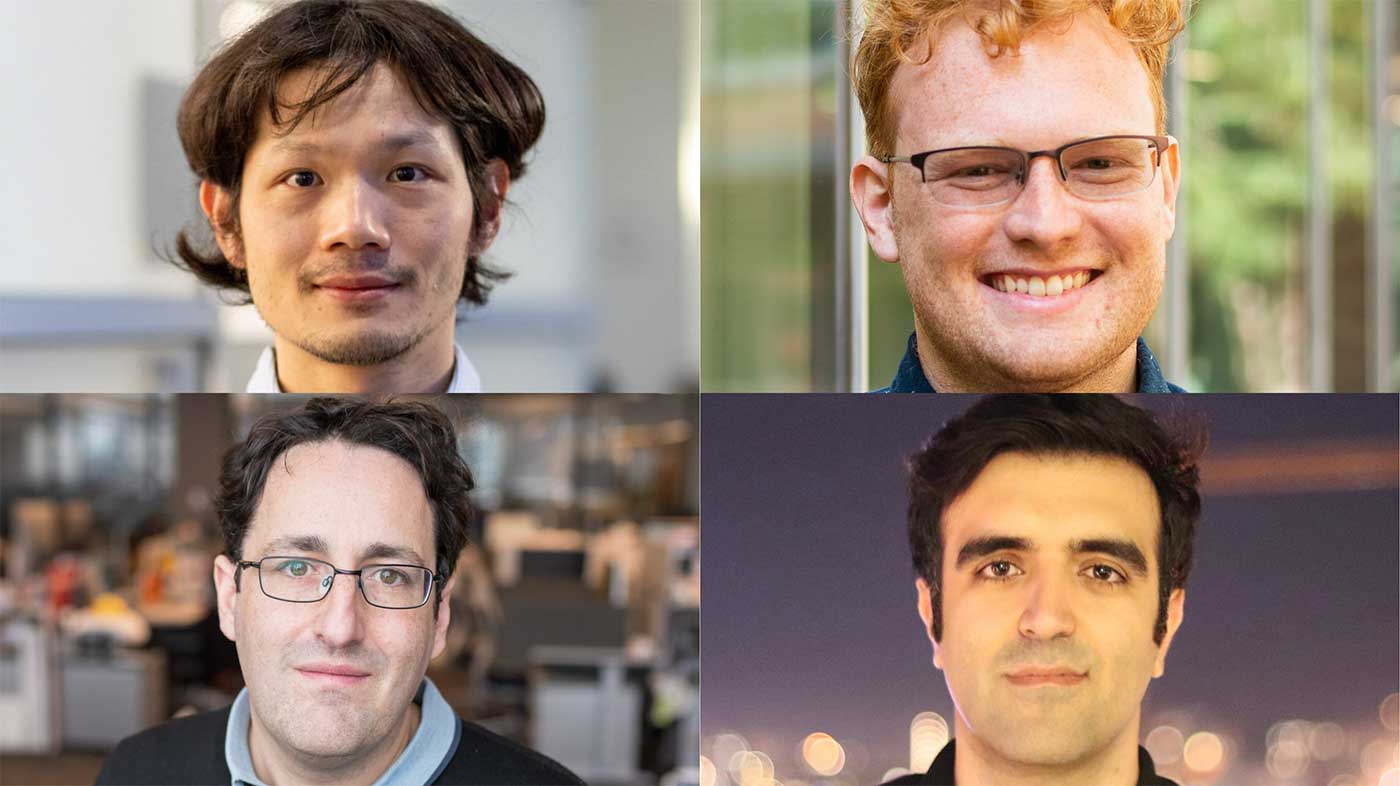

Clockwise from top left: Wei-Kai Lin, Ethan Mook, Soheil Behnezhad, and Daniel Wichs.

Clockwise from top left: Wei-Kai Lin, Ethan Mook, Soheil Behnezhad, and Daniel Wichs.

Within the past year, two Khoury College research papers have been recognized with prestigious awards for their contributions to theory-based research.

Former postdoctoral research associate Wei-Kai Lin, PhD student Ethan Mook, and Professor (and NTT Research Senior Scientist) Daniel Wichs won the Best Paper Award at the ACM Symposium on Theory of Computing (STOC). The team’s research focused on maintaining user privacy in search engines through fully homomorphic encryption, and discussed how the theory could be applied in practice.

Meanwhile, Professor Soheil Behnezhad won Best Paper at the ACM-SIAM Symposium on Discrete Algorithms (SODA), one of three flagship conferences in algorithms and theory. His research revolved around dynamic graph algorithms, optimizing processes for changing conditions, and large datasets.

Efficient and encrypted

Lin, Mook, and Wichs aimed to tackle a highly nuanced topic: would it be possible to search Google — or any search engine — without the engine learning anything about the user?

Mook says that it has been theoretically possible to do so using fully homomorphic encryption (FHE), which allows computation to be performed on data without the data itself being revealed. Their paper, titled “Doubly Efficient Private Information Retrieval (DEPIR) and Fully Homomorphic RAM Computation from Ring LWE,” brings this concept into reality.

“Think of it like putting your data into a locked box and performing operations on that data while it remains locked inside,” Mook explains. “I would give Google whatever it needs to do the search, but I’d protect my data without Google or any other third-party eavesdropping on it.”

However, Mook believes that standard FHE is unsatisfactory for the large databases that search engines work on. Instead, he and his team proposed a unique way to maintain user privacy while still delivering search results in seconds.

“Instead of using the circuit model of computation — in which processing speed depends on the size of the database — we focused on the random-access machine (RAM) model,” Mook says. “This way, the search engine could just read a single bit from the database without having to scan through the entire database, drastically reducing the time needed to receive the answer to a query while still maintaining user privacy.”

The biggest advantage of the RAM FHE model is that the server itself could save time by transforming the data into a format that is more easily processed (called “preprocessing”) instead of relying on a third party to perform the entire task — all without compromising encryption. The team based their work on prior research into private information retrieval and preprocessing polynomial computation, essentially simplifying equations to enable this faster processing.

“The server could preprocess parts of the cryptographic input, thereby reducing the time that the server needed to crunch the input message,” Mook says. “That formed the basis of DEPIR; we want the server to be ‘doubly efficient’ to show the result without going through the whole database.”

But before RAM FHE can deliver these advantages, some aspects of implementation still need to be squared away.

“Along with the cost of implementing the model, Google may not be that open to implementing the idea due to the large amount of information that users disclose through search queries,” Mook mentions. “Additionally, our results are mostly theoretical, so practically implementing such ideas may involve a few hiccups, such as the actual time that it would take, and the need to explore different assumptions on which DEPIR can be based.”

But in the meantime, the team will enjoy the “Best Paper” honor, one of two that the STOC program committee awarded this year.

“The DEPIR problem has been unresolved for many years,” Mook says. “The fact that this technique solves the DEPIR problem and then actually extends much further to solve all of FHE is a great promise.”

Boosting the algorithms that power our favorite apps

Soheil Behnezhad has worked on dynamic graph algorithms for multiple years, and now he has some hardware for his efforts. His recent paper on the maximal matching problem in the dynamic setting, titled “Dynamic Algorithms for Maximum Matching Size,” won the Best Paper Award at SODA from a pool of nearly 600 submissions.

Dynamic graph algorithms are designed to handle changes to a graph without starting from scratch after each change. Behnezhad brings up the example of Google Maps, which uses dynamic graph algorithms to predict the best driving route. In this case, the graph is the road network; the algorithm calculates the fastest route based on traffic conditions and changes its output as new information becomes available.

Now consider an app like Uber, in which dynamic algorithms can quickly figure out which nearby driver would be the best match to pick you up. The algorithm considers where you are, how close the available drivers are, and even the type of car you’d like to ride in — and it keeps working while you wait. If traffic or ridership increases, the algorithm can adjust to find the driver who can take you to your destination the fastest.

Behnezhad had previously approached the maximum matching problem through sublinear time algorithms, which solves problems using less time than it would take to go through each item in the input data one by one. But he never imagined that he’d find an answer to the dynamic matching problem through the same concept.

“What’s special about the new dynamic matching algorithm is that it uses a sublinear time algorithm inside another algorithm, thereby finding a new connection between sublinear time algorithms and dynamic algorithms,” Behnezhad explains. “This kind of connection, at least for the matching problem, was never found before and paves the way for future research on this problem.”

For Behnezhad, this project was part of a broader practical problem: the need to account for massive amounts of data while designing algorithms. By using dynamic matching algorithms to better handle these huge datasets, Behnezhad’s approach could help companies spend time that’s proportional to the flux of new information, rather than solving problems from scratch.

“Traditional algorithms do not scale well with how much growth we’ve had with data. They assume that you have the whole input in one computer,” Behnezhad says. “However, in recent years, inputs have grown so large that we cannot even fit them into the input of the into memory of a single machine, and that has changed the paradigm of algorithm design.”

Subscribe to Khoury News

The Khoury Network: Be in the know

Subscribe now to our monthly newsletter for the latest stories and achievements of our students and faculty