Khoury researchers showcase record 28 works at CHI 2024

Author: Madelaine Millar

Date: 05.09.24

The most prestigious human–computer interaction conference in the world is taking place in Hawaii this month, and Khoury College’s researchers — along with their collaborators in the College of Engineering (CoE), the College of Science (CoS), the College of Arts, Media and Design (CAMD), and the Bouvé College of Health Sciences — are ready. To discover their work, which touches on everything from parrot socialization to 3D-printed braille to historical anatomical drawings, click on one of the linked summaries below, or simply read on.

For a schedule of Khoury researchers’ presentations and other activities, visit our CHI 2024 page.

Khoury researchers are bolded.

1) Sensible and Sensitive AI for Worker Wellbeing: Factors that Inform Adoption and Resistance for Information Workers

Vedant Das Swain, Lan Gao, Abhirup Mondal, Gregory D. Abowd (CoE), Munmun De Choudhury

Best Paper winner

Ever since the pandemic disrupted the ways employees work and employers monitor them, passive-sensing AI (PSAI) tools have gained attention for collecting and inferring information about workers’ productivity and well-being. While these insights can help workers and their employers to make healthier, more effective choices, conventional design approaches have also led to workers feeling watched or exploited and resisting the tools. Given that these tools are mandatory for many jobs, is it possible for workers to benefit from PSAI tools without giving up more privacy than they’d like?

The researchers asked 101 information workers to evaluate the benefits and harms of hypothetical PSAI tools, which were differentiated by the type of sensing taking place, whether they measured work alone or included broader activity, whether the tool looked for insight into their work performance or into their personal well-being, and who the tool shared its insights with.

“In our previous study, workers revealed tensions where they worried … these applications might become surveillance tools rather than personal aids,” Khoury distinguished postdoctoral fellow Vedant Das Swain said. “That made us wonder, how do we design these sensing AI to maximize value for workers while minimizing the harms?”

The team found that workers were more open to tools that tracked time or physical activity and reacted very poorly to tools that used cameras. Online language trackers were viewed positively only if they monitored nothing but work-related material. Furthermore, the participants preferred tools generating insights into their well-being over insights into their performance, and they didn’t want those results shared with others. On average, participants were willing to adopt only 20 percent of the tools in the study, which indicates a need to design PSAI with more care toward workers.

2) Barriers to Photosensitive Accessibility in Virtual Reality

Laura South, Caglar Yildirim, Amy Pavel, Michelle A. Borkin

Honorable Mention winner

Virtual reality is an increasingly popular way to do everything from playing games to socializing with remote coworkers. Many people with photosensitive epilepsy have expressed interest in the technology, but because of the risks involved, there is little concrete information available about VR’s safety for epileptic people.

In interviewing five people with epilepsy or seizures triggered by photosensitivity, the researchers found a wide range of potential barriers to VR accessibility, although what posed a barrier depended on the specifics of the interviewee’s condition. There were physical concerns over VR headsets putting visual stimuli closer to the eyes while making it harder to get away quickly, or over a heavy headset injuring the wearer if they have a seizure and fall over. There were content barriers, such as the fact that VR stimuli are less predictable than many types of on-screen content, and that users without technical know-how might struggle to shut it down quickly in case of a triggering stimulus. There were also application-related barriers; for example, stress and exhaustion make people more sensitive to triggers, so a VR workplace might be riskier than a relaxing VR game.

“The consequences of encountering inaccessible content can be extremely severe for people with photosensitive epilepsy, and often it is possible to vastly improve the safety of digital platforms through relatively low-cost interventions,” Khoury doctoral student Laura South said. “People with photosensitive epilepsy have not historically been represented in computer science and human–computer interaction research, so it is important to me to center their voices in my research by conducting interviews and building systems based on their feedback.”

One central fix, the researchers wrote, would be to automatically test a program for flashing lights and prominently display warnings as needed. Ideally, the program would be customizable so that photosensitive users could turn off display elements that triggered their condition. The team also recommended designing lighter headsets that are easier to remove, or including a way to notify a friend that the user might need medical care.
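An automatic flash test could take many forms. As a rough illustration, the Python sketch below flags footage containing more than three flashes in any one-second window, a threshold borrowed from the WCAG "three flashes" guideline. It is a simplified, hypothetical check and not the team's tool: it assumes each frame has already been reduced to a single average relative-luminance value between 0 and 1, treats a reversal of at least 0.1 in luminance as half of a flash, and ignores the size of the flashing area, which the WCAG definitions also take into account.

```python
from typing import List, Sequence


def flash_transitions(luma: Sequence[float], delta: float = 0.1) -> List[int]:
    """Return frame indices where luminance jumps by at least `delta`
    in the opposite direction from the previous qualifying jump.

    Each such reversal is counted as half of a flash (a simplifying assumption).
    """
    events: List[int] = []
    last_direction = 0  # +1 brightening, -1 darkening, 0 no jump seen yet
    for i in range(1, len(luma)):
        change = luma[i] - luma[i - 1]
        if abs(change) >= delta:
            direction = 1 if change > 0 else -1
            if direction != last_direction:
                events.append(i)
                last_direction = direction
    return events


def exceeds_flash_limit(luma: Sequence[float], fps: int,
                        max_flashes: int = 3, delta: float = 0.1) -> bool:
    """True if any one-second window contains more than `max_flashes` flashes."""
    events = flash_transitions(luma, delta)
    start = 0
    for end in range(len(events)):
        # Move the window start forward until the window spans less than one second.
        while events[end] - events[start] >= fps:
            start += 1
        transitions = end - start + 1
        if transitions // 2 > max_flashes:  # two opposing transitions make one flash
            return True
    return False


if __name__ == "__main__":
    fps = 30
    strobe = [0.1 if (k // 3) % 2 == 0 else 0.9 for k in range(2 * fps)]  # toggles every 3 frames
    steady = [0.5] * (2 * fps)
    print(exceeds_flash_limit(strobe, fps))  # True
    print(exceeds_flash_limit(steady, fps))  # False
```

A production checker would also need to account for the flashing area and for saturated red flashes, which this sketch leaves out.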

3) Odds and Insights: Decision Quality in Exploratory Data Analysis Under Uncertainty

Abhraneel Sarma, Xiaoying Pu, Yuan Cui, Eli T. Brown, Michael Correll, Matthew Kay

Honorable Mention winner

The proliferation of useful, user-friendly data tools means two things: More people than ever are using data visualizations to make business decisions, and more people than ever are making beginner-level statistical errors and discovering “insights” that aren’t true. One common mistake stems from the multiple comparison problem: when many hypotheses are tested at once, some will appear meaningful by chance alone, making it easy to confuse noise with signal. But what if the data tools could visualize uncertainty?

To start out, the researchers gave 180 participants a set of 12–20 graphs that showed the profitability of 20 stores in a region, then asked them to predict whether the entire region of 200 stores was profitable. One third of the participants were provided only a scatterplot of data points, another third was given marks at the mean and at 50% confidence intervals, and the final third was given a probability density function, a bell curve showing how densely grouped data points were across the entire set. The team then compared the participants’ results against two statistical golems (data analyzers) — one controlling for the rate at which the wrong insight was drawn from multiple comparisons, and one not.

While the participants given only a scatterplot consistently came up with more untrue insights than the golem that did not control for the multiple comparison problem, both groups given uncertainty visualizations performed better, particularly those given probability density functions. This suggests that while participants are aware of the risk of drawing the wrong conclusions, they need uncertainty visualizations to avoid doing so.
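The arithmetic behind the multiple comparison problem is easy to sketch. If an analyst runs m independent tests at significance level alpha and none of the effects is real, the chance of at least one false "insight" is 1 - (1 - alpha)^m. The Python sketch below computes that figure, checks it by simulation, and applies a Bonferroni correction (testing each comparison at alpha / m), which is one simple way to control the problem. Using 20 comparisons echoes the 20 stores in the study only as an illustrative assumption, and the study's golems may have used a different correction procedure.

```python
import random


def familywise_error(m: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive across m independent null tests."""
    return 1 - (1 - alpha) ** m


def simulate_false_insights(m: int = 20, alpha: float = 0.05, trials: int = 20_000,
                            bonferroni: bool = False, seed: int = 0) -> float:
    """Estimate how often at least one of m null comparisons looks 'significant'."""
    rng = random.Random(seed)
    threshold = alpha / m if bonferroni else alpha
    hits = 0
    for _ in range(trials):
        # When there is no real effect, p-values are uniformly distributed.
        p_values = [rng.random() for _ in range(m)]
        if min(p_values) < threshold:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    print(round(familywise_error(20), 3))           # 0.642
    print(simulate_false_insights())                 # close to 0.64
    print(simulate_false_insights(bonferroni=True))  # close to 0.05
```

With 20 uncorrected comparisons, roughly two out of three analyses will surface at least one spurious pattern, which is the kind of untrue insight the scatterplot-only participants tended to report.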

“We are giving people the wrong tools for the job,” Khoury professor Michael Correll said. “If I’m asking you questions about uncertain information, it’s at least a little bit rude that I’m not giving you visualizations that communicate uncertainty in any direct way. Kind of like giving people peanut butter, jelly, bread, and a knife, and then acting surprised that they made a PB&J sandwich and not a filet mignon.”

4) A Design Framework for Reflective Play

Josh Aaron Miller, Kutub Gandhi, Matthew Alexander Whitby, Mehmet Kosa (CAMD), Seth Cooper, Elisa D. Mekler, Ioanna Iacovides

The emotional challenges offered by games make them ideal for transformative reflection — a cognitive or behavioral reappraisal that helps participants develop new insights, values, and behaviors. Research on the reflective potential of games exists already, but how do insights translate across disciplines, and how can that insight be transformed into guidelines that game designers can use?

To answer these questions, the researchers synthesized academic papers, blog posts, videos, individual games, and more, then devised a five-step player journey for reflective experiences in games. First, disruptions and discomfort in the game lead to slowdowns. These moments of stillness create space for the game to pose either a question that demands self-explanation (“why do you think that happened?”) or one where the player can develop and test hypotheses (“can you figure out why that happened?”). Then, when players revisit the experience, the game can prompt reflection, break the fourth wall, or encourage discourse with other players.

“Games are a wonderfully powerful medium for reflection, for getting their players to reflect on themselves and the world around them,” Khoury doctoral student Kutub Gandhi said. “To bolster the accessibility of the framework for game designers, we simplified it into a deck of cards. Each card is a pattern in the framework, and designers can pull on these cards for inspiration as they build their games.”

While mainstream games and serious games (those designed for purposes other than entertainment) usually aren’t well suited to transformative reflection, experimental games — where gameplay conventions are looser — often are. To be a good fit, a game should value questions over answers, clarity over stealth, disruption over comfort, and reflection over immersion.

5) An Exploration of Learned Values Through Lived Experiences to Design for BIPOC Students’ Flourishing

Laveda Chan (CoS), Dilruba Showkat, Alexandra To (+CAMD)

Much has been written about the negative, frustrating, and dehumanizing experiences that Black, Indigenous, and people of color (BIPOC) often face at predominantly white institutions (PWIs), and how that harm can be addressed. However, far less study has been devoted to the positive and fulfilling experiences of BIPOC students, and how to best cultivate and support those experiences.

To explore those experiences, the researchers interviewed 17 BIPOC students who attended or had recently graduated from PWIs. They identified four values that the students considered essential to their success: resistance to adversity, aspiration for their futures, gratitude for support, and pride in their accomplishments and growth. They also identified specific ways those values manifested. Their findings showed that when BIPOC students seized opportunities and engaged in activities for connection and personal growth, they could counteract racial barriers at their PWIs — highlighting the importance of centering students’ experiences of joy, love, community building, and racial identity development.

“These positive aspects have been linked to persistence and satisfaction throughout students’ enrollment in college,” Khoury doctoral student Dilruba Showkat said. “Our aim is to empower BIPOC students by making space for the full spectrum of their experiences in human-centered design efforts.”

The researchers identified three critical elements to consider when designing systems to support BIPOC students’ emotional well-being at PWIs: pleasure, personal significance, and virtue. In practical terms, that means system designers should try to create experiences that uplift those learned values and activities and that are enjoyable and relaxing, meaningful and fulfilling, or conducive to virtuous behavior such as open-mindedness.

6) Bitacora: A Toolkit for Supporting NonProfits to Critically Reflect on Social Media Data Use

Adriana Alvarado Garcia, Marisol Wong-Villacres, Benjamín Hernández, Christopher A. Le Dantec (+CAMD)

When Adriana Alvarado Garcia and Accelerator Lab Mexico developed the Bitacora toolkit, they did so to help nonprofits that were interested in using Twitter (now called X) data to explore and refine their ideas for social media. Guided exploration and reflection, they hoped, would help users discover that Twitter is often a costly or infeasible tool for coordinating on-the-ground crisis response. For example, during the early part of the COVID pandemic, could listening to conversations on Twitter have helped NGOs figure out what specific communities needed most, and better target the delivery of things like healthcare, childcare, and food?

“Social media data can provide a rich, real-time view into how communities are identifying and responding to a crisis. This kind of insight can be incredibly valuable for nonprofit organizations who want to support grassroots emergency response,” said Christopher Le Dantec, professor of the practice and the director of digital civics at Khoury College and CAMD. “However, understanding how to effectively select, clean, and make sense of social media can be a challenge.”

Le Dantec, along with Garcia and other colleagues, researched how ten practitioners at three nonprofits interacted with the Bitacora toolkit in the real world. The toolkit takes users through three iterative steps. The first is defining their topic within a limited set of keywords and date ranges and gathering data on the existing Twitter discussion of the topic. The second is defining and documenting the basic information that is known to be true, and the formats in which that information is conveyed. The third is qualitatively analyzing examples to determine how the Twitter community conceptualizes the problem, and mapping out how different ideas are seen as relating to one another.
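The first Bitacora step, narrowing a topic to a limited set of keywords and a date range, amounts to a filter over collected posts. The Python sketch below is a hypothetical illustration, not part of the toolkit: the `Post` record, the case-insensitive substring matching, and the sample posts are all assumptions, and it works on posts already collected rather than calling any Twitter/X API. Even this toy version hints at why choosing keywords is hard: the keyword "food" also matches a post about a seafood recipe.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List


@dataclass
class Post:
    text: str
    posted: date


def select_posts(posts: Iterable[Post], keywords: Iterable[str],
                 start: date, end: date) -> List[Post]:
    """Keep posts dated within [start, end] whose text contains any keyword (case-insensitive)."""
    terms = [k.lower() for k in keywords]
    return [
        p for p in posts
        if start <= p.posted <= end and any(t in p.text.lower() for t in terms)
    ]


if __name__ == "__main__":
    posts = [
        Post("Food bank on 5th St needs volunteers", date(2020, 4, 2)),
        Post("This seafood recipe is amazing", date(2020, 4, 3)),              # substring false match
        Post("Where can families get food this week?", date(2019, 12, 30)),  # outside the date range
    ]
    for p in select_posts(posts, ["food"], date(2020, 3, 1), date(2020, 6, 30)):
        print(p.text)  # prints the food bank post and the seafood post
```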

While the toolkit did slow practitioners down and prompt them to examine the problems they would encounter, it did so in unexpected ways. For example, when users discovered how difficult it was to find keywords that would return usable results, many adjusted by altering elements of the topic to fit Twitter’s capabilities, rather than reflecting on how changing the topic would impact their organization’s goals. Practitioners also found completing the entire toolkit to be too time-consuming for emergency response situations; instead, it could be used as a guided learning tool to teach practitioners about the limits of Twitter’s capabilities, in advance of the crisis situations where its use might be suggested.

7) Building LLM-based AI Agents in Social Virtual Reality

Hongyu Wan, Jinda Zhang, Abdulaziz Arif Suria, Bingsheng Yao, Dakuo Wang (+CAMD), Yvonne Coady, Mirjana Prpa

Non-player characters (NPCs) are a staple of most video games, and though their dialogue ranges from heartfelt to quippy to famously stilted, they have always been limited to a small range of set phrases. In social gaming platforms (such as VRChat), NPCs’ ability to remember players’ data over time and provide responses contextualized by previous interactions is crucial. So, the researchers wondered, could text-generating large language models (LLMs) remember and generate dialogue for video game NPCs, and if so, what info would the AI models need?

Using an NPC delivering dialogue generated by GPT-4, the researchers varied the amount and type of information they provided about the NPCs and their environments. They applied three metrics to test the quality of the generated NPC replies:

- Did the dialogue-generating system function correctly?

- Did another LLM think the response was generated correctly?

- Did a human observer think the LLM’s response could be human?

The researchers found that the ideal amount of prompt information was three base observations (things like the NPC’s name, character details, and future plans) and five context observations (things going on around the NPC). With these inputs, their system returned plausible responses and observations 95 percent of the time.
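A prompt built from three base observations and five context observations might be assembled along the lines of the Python sketch below. The function name, the instruction wording, and the choice to keep the five most recent context observations are hypothetical; the sketch only builds the prompt text and does not call GPT-4 or any other model.

```python
from typing import List


def build_npc_prompt(base_obs: List[str], context_obs: List[str], player_line: str,
                     n_base: int = 3, n_context: int = 5) -> str:
    """Assemble an NPC dialogue prompt from a capped number of observations."""
    base = base_obs[:n_base]  # stable facts: name, character details, plans
    context = context_obs[-n_context:] if n_context > 0 else []  # most recent surroundings
    lines = ["You are a non-player character (NPC) in a social VR world.", "About you:"]
    lines += [f"- {obs}" for obs in base]
    lines.append("What is happening around you:")
    lines += [f"- {obs}" for obs in context]
    lines.append(f'A player says: "{player_line}"')
    lines.append("Reply in character in one or two sentences.")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_npc_prompt(
        ["Your name is Mara.", "You run the plaza cafe.", "You plan to host a concert tonight."],
        ["A player is dancing.", "Music is playing.", "It is getting dark.",
         "Two players are arguing.", "A new player just arrived."],
        "Hi! What's going on here?",
    )
    print(prompt)
```

Capping both lists keeps the prompt short and predictable; which observations to keep when more are available is a design choice the sketch makes arbitrarily.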

“Social VR platforms … are focal points where complex social interactions occur between players,” Khoury professor Mirjana Prpa said. “It became a timely topic to … understand the role of LLM agents in social VR worlds and how they should be utilized and even regulated so that the values of human interaction in VR are preserved and enriched.”

8) Call of the Wild Web: Comparing Parrot Engagement in Live vs. Pre-Recorded Video Calls

Ilyena Hirskyj-Douglas, Jennifer Cunha (NU affiliated researcher), Rebecca Kleinberger (+CAMD)

Khoury–CAMD assistant professor Rébecca Kleinberger and her colleagues, the University of Glasgow’s Ilyena Hirskyj-Douglas and Northeastern’s Jennifer Cunha, caused a media flurry last year with their research into the positive social impacts of video calls for lonely parrots. But given the difficulty of coordinating the birds’ calendars, the researchers wondered if recorded footage would provide the same enrichment using fewer resources.

To answer this question, the researchers tested nine parrots’ engagement with prerecorded versus live video calls. After being trained to use the systems, the birds were given opportunities to call each other in three-hour windows, three times a week, for eight weeks. Then the experiment was repeated, but the birds’ calls were directed to pre-recorded footage of other parrots instead of live footage of one another. After both phases of the experiment, the birds’ caregivers were asked for their opinions and observations.

On average, the birds spent four times as long on live video calls as on pre-recorded ones. They also initiated more live calls, and those calls lasted longer. Caregiver feedback suggested that this discrepancy arose because the birds could tell when the calls weren’t live: the birds in the pre-recorded footage didn’t respond to them, and many of the parrots found that unfulfilling.

9) Counting Carrds: Investigating Personal Disclosure and Boundary Management in Transformative Fandom

Kelly Wang, Dan Bially Levy, Kien T. Nguyen, Ada Lerner, Abigail Marsh

Transformative fandoms are online spaces where primarily queer and female users creatively iterate on a piece of media and share their creations with a community of other fans. In these communities, it is common to proactively and publicly disclose vulnerable personal information, a practice that existing models of privacy, which hinge on withholding such information from strangers and developing intimacy only with close friends, can’t explain. So what’s going on?

To find out, the researchers studied 5,252 fan Carrds — personal websites that provide information about their owners and are usually linked in the bios of social media pages. They found that Carrds tended to cover three categories: identity elements like age, gender, orientation, and disability status; Do Not Interact (DNI) criteria that listed who the user did not want following them on social media; and Before You Follow (BYF) disclosures that explained the user’s etiquette around things like content warnings and blocking. To users outside the community, these Carrds may appear nonsensical or even unsafe. Common DNI criteria like “no homophobes” are functionally unenforceable, and sharing vulnerable personal characteristics like mental health diagnoses or transgender identity with strangers can carry real risk.

But just because existing models don’t explain this behavior doesn’t mean that the behavior doesn’t serve a function, which is why the researchers propose developing a new model of collective privacy based on developing trust cyclically at the community level. As users share personal information and boundaries, the community develops inclusive values and norms, resulting in a safe, trusting environment where users can explore and affirm identities they may not be able to safely disclose offline.

“It brings a lot of new ideas into the discussion of what we mean by privacy, which we can take for granted sometimes when we focus on a particular type of privacy problem like keeping sensitive data a secret,” Khoury doctoral student Kelly Wang said. “I think this conception of privacy especially reflects the lived experiences of users on the margins … who need to self-censor with certain people but find that that’s also counterproductive to their goals, like participating in community.”

10) Country as a Proxy for Culture? An Exploratory Study of Players in an Online Multiplayer Game

Alessandro Canossa, Ahmad Azadvar, Jichen Zhu, Casper Harteveld (+CAMD), Johanna Pirker

Playing video games is a pastime that crosses the borders of country and culture to unite players who would never otherwise encounter each other. But do those borders impact how players play, communicate, and collaborate? And is grouping players by country a legitimate approach when attempting to research cultural differences?

The researchers examined these questions in the first large-scale exploratory study of its kind. They surveyed players of Rainbow Six Siege, an economically accessible, online, multiplayer, first-person shooter game with a dedicated global fanbase and a range of viable play styles. The survey covered demographics, habits like how much time or money someone spent on the game, technical skill and player ratings, and psychographic questions on players’ impulses and subconscious attitudes. In total, 14,361 players from 107 countries completed the survey.

The team then scored how much two players from the same country would differ on average in their answers and attitudes (within-country variance) and how much two players from different countries would differ (between-country variance). They found that between-country variance was greater than within-country variance by a small but statistically significant amount. This indicates that future studies could group video game players into study groups based on their country of origin.
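The within- versus between-country comparison can be illustrated with a toy calculation. The Python sketch below takes one numeric survey score per player, averages the squared difference over every pair of players from the same country and over every pair from different countries, and returns both figures. The scores and country labels are invented, and the sketch omits the significance testing the researchers reported. It also compares every pair directly, so at the study's scale of 14,361 players (roughly 100 million pairs) one would switch to closed-form variance calculations.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def pairwise_spread(scores_by_country: Dict[str, List[float]]) -> Tuple[float, float]:
    """Return (mean squared difference within countries,
    mean squared difference between countries) over all player pairs."""
    players = [(country, score)
               for country, scores in scores_by_country.items()
               for score in scores]
    within: List[float] = []
    between: List[float] = []
    for (c1, s1), (c2, s2) in combinations(players, 2):
        squared_diff = (s1 - s2) ** 2
        (within if c1 == c2 else between).append(squared_diff)
    return sum(within) / len(within), sum(between) / len(between)


if __name__ == "__main__":
    toy = {"A": [1.0, 2.0, 3.0], "B": [7.0, 8.0, 9.0]}
    w, b = pairwise_spread(toy)
    print(w, round(b, 2))  # 2.0 37.33
```

In this deliberately exaggerated toy data, between-country differences dwarf within-country ones; the study found a much smaller gap, only slightly favoring between-country differences.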

11) Ellie Talks About the Weather: Toward Evaluating the Expressive and Enrichment Potential of a Tablet-Based Speech Board in a Single Goffin’s Cockatoo

Jennifer Cunha (affiliated NU researcher), Corinne C. Renguette, Nikhil Singh, Lily Stella, Megan McMahon (CoS), Hao Jin, Rebecca Kleinberger (+CAMD)

It’s natural for humans to wonder what our pets might say if they could talk. In hopes of making that dream a reality, some animal owners have turned to human-focused augmentative and alternative communication devices (AACs), such as tablets with buttons for different words. But because we can’t directly verify what the animals are trying to say, it’s difficult to determine whether the tablets allow them to communicate effectively.

To gain insight into parrot self-expression using AACs, this research team spent seven months gathering data on how a Goffin’s cockatoo named Ellie used an AAC tablet. The tablet had 148 words that the bird could access by navigating several branching sub-menus, which were organized based on topics like eating, drinking, and playing. The team recorded nearly 4,700 button presses, with the goal of determining whether they were random or indicative of complex choices. Additionally, the researchers built a schema for checking whether individual button presses could be corroborated.

The team was able to corroborate the bird’s selections about 92 percent of the time, an impressive rate. Furthermore, they could not match the experimental results with data generated by a mathematical model, further implying that Ellie’s choices of words were not purely random or based on things unrelated to communication, like button location or brightness.

12) Engaging and Entertaining Adolescents in Health Education Using LLM-Generated Fantasy Narrative Games and Virtual Agents

Ian Steenstra, Prasanth Murali, Rebecca B. Perkins, Natalie Joseph, Michael K. Paasche-Orlow, Timothy Bickmore

Educating adolescents about their health in an engaging way is critical to ensure they develop lifelong healthy habits. Video games are famous for holding tweens’ attention; could they be an effective, responsive, and affordable way to provide health education?

Using a large language model (LLM) and virtual agents, the researchers produced two short, interactive health interventions to teach 9- to 12-year-olds about the HPV vaccine. The first was a more traditional pedagogical agent, which simulated a counseling session in a doctor’s office and asked quiz questions about HPV vaccination without any kind of storyline, points, or progression.

The second intervention was called “The Quest for the HPV Vaccine.” Players helped Evelyn the Adventurer to find Doctor Clara and the HPV vaccine to protect a village from HPV-related diseases. The game took players through stages like a glowing mushroom forest, the mountains, a cave, and the village itself, and had them answer questions posed by various fantasy creatures. Correct answers gained points that helped the player move to the next level and progress the story.

Thirteen adolescents completed the interventions under parent supervision, then the players and their parents provided feedback. As the team hypothesized, both interventions increased participants’ knowledge and acceptance of both the HPV vaccine and vaccination in general, and the narrative game was more engaging than the pedagogical agent. However, the narrative game did not outperform the pedagogical agent in improving players’ intent to seek vaccination. The team also discovered that LLMs were an effective way to quickly formulate games like this, but it was important to keep a human involved to catch the falsehoods that the AI generated.

“Since the COVID-19 pandemic, there has been a rise in vaccine denial or skepticism for other vaccines than just COVID-19; this includes the HPV vaccine as well,” Khoury doctoral student Ian Steenstra said. “By intervening on the child or adolescent, by providing them with knowledge about HPV and HPV vaccination, we hope to spark vaccination conversations in their families.”

13) From Provenance to Aberrations: Image Creator and Screen Reader User Perspectives on Alt Text for AI-Generated Images

Maitraye Das, Alexander J. Fiannaca, Meredith Ringel Morris, Shaun K. Kane, Cynthia L. Bennett

AI image-generating technology has grown exponentially in capability and popularity since the release of DALL-E in 2021. For visually impaired and blind people who use screen readers to interpret the internet, though, the fact that image generators don’t produce alt text — a short description of the image that can be read aloud — means the new technology is frustratingly inaccessible.

The researchers gathered 16 screen reader users, then asked 16 AI image creators to generate five images apiece and write alt text for each one. Then, both groups evaluated four examples of alt text for the same image: the original prompt, the creator’s alt text, alt text written by the researchers, and alt text generated automatically by a vision-to-language (V2L) model.

Unsurprisingly, the four alt texts had different characteristics. The creators’ and researchers’ texts tended to be longer and more detailed, while the V2L text was short and terse. The original prompts provided passable descriptions of some images but were often too abstract or generic to give clues about the image’s content or composition, and frequently contained unwieldy jargon. They were also sometimes inaccurate if the creator included elements in the prompt that the AI didn’t fully render, indicating that prompt text was not a good substitute for alt text.

“We want to call for provenance, explainability, and accessibility researchers to work together to address this critical issue as text-to-image models continue to evolve,” Khoury and CAMD professor Maitraye Das said.

Das and her team also recommend that, in contrast to other forms of alt text, alt text for AI images should clearly indicate that the image was AI-generated and whether it contains any of the aberrant features and bizarre renderings that AI images have become known for, detail what the creator intended any misgenerated element to be, and describe the style and ambiance of the image in objective terms.

14) GigSousveillance: Designing Gig Worker Centric Sousveillance Tools

Maya De Los Santos (CoE), Kimberly Do, Michael Muller, Saiph Savage

From rideshares and food delivery apps to online platforms like Mechanical Turk and Upwork, gig work has become incredibly common. Unfortunately, gig workers’ status as independent contractors, plus the physical isolation they often face, means that they are disproportionately vulnerable to being tracked and exploited using everything from webcams to mouse trackers. Drawing on a concept called sousveillance (from the French for “watching from below”), the researchers, led by College of Engineering undergraduate Maya De Los Santos, investigated whether gig workers could ensure accountability, empowerment, and care for themselves by watching their employers back.

“Gig workers are often denied access to their own data regarding their work, which leaves them vulnerable to platform manipulation like data theft and labor coercion. To combat this, our stance promotes the empowerment of workers through access to their own data, enabling them to resist the unfair practices of their bosses,” said Saiph Savage, Khoury professor and director of Northeastern’s Civic A.I. Lab. “This paper delves into workers’ imaginations and the potential impact of such technologies on their professional lives.”

The researchers sought gig workers’ insights into their use of — and attitudes toward — sousveillance, as well as the needs that future sousveillance tools might address. They found that workers tended to watch the organizations requesting their labor in three ways: by researching their reputations online, by noting other workers’ experiences through avenues like independent review boards, and by treating a lack of available information on an organization as a red flag. While workers appreciated that sousveillance helped them seek better-fitting gigs, communicate with their employers, and avoid scams, they had reservations about collecting data on their employers, breaking laws or damaging relationships, and putting themselves in emotionally challenging situations by learning more than they wanted to know.

Based on worker feedback to three potential tool designs — a well-being tracker, a requester review board, and an invisible labor tracker — the team developed several design recommendations to make sure future tools are empathetic and responsive to workers’ needs. For example, they found that a tool aggregating and filtering information about potential employers would likely be welcome; meanwhile, a tool tracking invisible labor and notifying workers when they were making less than the minimum wage would be demoralizing and unhelpful because there was little the workers could do about it. Workers also wanted their sousveillance tools to be user-friendly, and to carry a reputation as professional, transparent, and mutually beneficial for employees and employers.
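The invisible labor tracker that workers found demoralizing rests on simple arithmetic: divide earnings by all the time a gig actually took, including unpaid work such as searching for tasks or waiting, and compare the result with a minimum wage. The Python sketch below is a hypothetical version of that calculation, not the team's design; the field names are invented, and the $7.25 default is the U.S. federal minimum wage, used only as an example threshold.

```python
from dataclasses import dataclass


@dataclass
class Gig:
    pay: float             # dollars earned for the task
    paid_minutes: float    # time spent on the task itself
    unpaid_minutes: float  # invisible labor: searching, qualifying, waiting, messaging


def effective_hourly_wage(gig: Gig) -> float:
    """Earnings divided by total hours, counting unpaid time."""
    hours = (gig.paid_minutes + gig.unpaid_minutes) / 60
    return gig.pay / hours


def below_minimum(gig: Gig, minimum_wage: float = 7.25) -> bool:
    """True if the effective hourly wage falls under the given minimum."""
    return effective_hourly_wage(gig) < minimum_wage


if __name__ == "__main__":
    gig = Gig(pay=30.0, paid_minutes=180, unpaid_minutes=120)
    print(effective_hourly_wage(gig))  # 6.0
    print(below_minimum(gig))          # True
```

As the workers pointed out, a number like this is easy to compute but offers little recourse on its own, which is why they favored tools that helped them choose better gigs in the first place.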

15) How Beginning Programmers and Code LLMs (Mis)read Each Other

Sydney Nguyen, Hannah McLean Babe, Yangtian Zi, Arjun Guha, Carolyn Jane Anderson, Molly Q. Feldman

Many a hand has been wrung in recent years about generative AI replacing students learning how to code. But most of the research into coding large language models (LLMs) has looked at users who are already experts. How — and how effectively — do beginning coders work with generative AI?

The researchers had 120 students who had completed introductory computing courses use the Codex text-to-code LLM to solve a series of tasks, mediating the students’ interactions with the LLM through a character called Charlie the Coding Cow. Based on examples of correct inputs and outputs, students wrote natural language descriptions of what they wanted their code to do, then gave them to Charlie, who returned Codex’s automatically generated code. While participants could edit their prompts, they could not edit the code itself; when they were satisfied with the result, participants told Charlie whether his answer was correct and whether they would have written the code themselves.

The researchers found that the students ran into issues getting Charlie to understand what they wanted. Additionally, errors in the generated code and limits in their own knowledge prevented them from completing the tasks. Just over half managed to generate correct code, and only a quarter could do it on the first attempt. The students also misunderstood what Charlie was, generally thinking of him like a dictionary that would add the right code if the right keyword was used, so they tended to edit their prompts by adding more details. Students who correctly identified Charlie as a model that generates the most statistically probable next output tended to use him more successfully, as did students who had more experience with computer science.

“My research group has been developing large language models trained on code, and it is quite remarkable what they can do. But, since they are trained using code that is almost entirely written by professional programmers, we started to wonder if beginners would be able to use code LLMs as effectively as professionals can,” Khoury professor Arjun Guha said. “We concluded that beginners and code LLMs frequently miscommunicate with each other. Students tend to write prompts that LLMs do not understand, and LLMs respond with code that students don’t understand.”

Based on the team’s findings, generative AI’s goal of making coding accessible to everyone, regardless of technical experience, is still a ways off. For now, a strong foundation in CS fundamentals remains critical for student success.

16) It’s a Fair Game, or Is It? Examining How Users Navigate Disclosure Risks and Benefits When Using LLM-Based Conversational Agents

Zhiping Zhang, Michelle Jia, Hao-Ping (Hank) Lee, Bingsheng Yao, Sauvik Das, Ada Lerner, Dakuo Wang (+CAMD), Tianshi Li

Large language models (LLMs) like ChatGPT can be a great tool for researching topics conversationally. But as the models become more common in sensitive areas like healthcare, financial planning, and counseling, they raise unique privacy concerns that users may not fully grasp.

The researchers analyzed 200 chat logs from ChatGPT and interviewed 19 users to better understand their behavior and mental models of the tool. They found that many users disclosed sensitive personal information like their name, email address, phone number, and passport number, and sometimes disclosed other people’s personal information as well. While many users concerned with data misuse chose not to reveal certain details, they also believed that privacy risks were a necessary trade-off to get the model to work effectively, and that most of that personal data was likely already compromised anyway.

Many participants didn’t quite understand how the models functioned, which obscured the real privacy risks. Chatbots pose two main privacy concerns: traditional data breaches and memorization risks, in which a model reproduces private information that was part of its training data. Unless users understood that the LLM uses statistics to predict the most probable next word in a sequence, and that it could use their inputs as training data to learn what that next word should be, they weren’t likely to understand memorization risks.

Furthermore, even users who understood the memorization risks were unlikely to understand how to protect their privacy. On ChatGPT, for a user to opt out of having their inputs used as training data while still retaining their own personal chat history, they had to submit a form through an FAQ article that none of the participants could find without help.

“Policymakers interested in regulating generative AI technologies may find our findings useful for rulemaking, particularly for health care and education,” Khoury professor Tianshi Li said. “Companies that build LLM-powered services can refer to our design recommendations to improve their privacy designs. In fact, we noticed ChatGPT recently implemented a new privacy feature called ‘Temporary Chat,’ which is just one of the features requested by our participants and reported in this paper.”

17) Malicious Selling Strategies in Livestream E-commerce: A Case Study of Alibaba’s Taobao and ByteDance’s TikTok

Qunfang Wu, Yisi Sang, Dakuo Wang (+CAMD), Zhicong Lu

Although livestream shopping, a modern hybrid of TV shopping channels like QVC and social media influencing on platforms such as TikTok and YouTube, is still nascent in the United States, the format has exploded in China, especially since the pandemic. While livestream shopping offers new advantages, such as a social shopping experience and a wider audience for small businesses, it also creates new challenges. Streamers may manipulate, coerce, or trick their viewers into buying products, a phenomenon known as malicious selling.


To investigate, the researchers analyzed 40 livestreams across the shopping platforms Taobao and TikTok, then interviewed 13 shopping livestream viewers. They found that malicious selling strategies fall into four categories:

- Restrictive strategies, in which streamers told viewers to purchase or subscribe to get a discount or reward

- Deceptive strategies, in which streamers falsified information such as how scarce or popular a product was

- Covert strategies, in which streamers made products more appealing through subtle techniques such as special lighting

- Asymmetric strategies, in which streamers created subtle emotional pressure (e.g., baiting customers with a cheaper product and then pressuring them to buy a pricier one)

Furthermore, the researchers found that certain platform design features enabled or encouraged restrictive and deceptive strategies, while covert and asymmetric strategies relied more on the salesperson’s ability; asymmetric strategies could also be hard for viewers to spot. The study’s livestream viewers expressed several frustrations, such as how difficult it was to see exactly what they were buying, research products, and gauge their popularity.

18) No More Angry Birds: Investigating Touchscreen Ergonomics to Improve Tablet-Based Enrichment for Parrots

Rebecca Kleinberger (+CAMD), Jennifer Cunha (NU affiliated researcher), Megan McMahon (CoS), Ilyena Hirskyj-Douglas

As she’s studied the social behavior and tech interactions of various animals, including pandas, orcas, and lonely parrots, Khoury–CAMD assistant professor Rébecca Kleinberger has become acutely familiar with just how unsuited tablet touchscreens are to beaks, paws, tongues, and talons, and with how app interfaces aren’t designed for users whose faces must touch the screen with every tap. So how do animals, and parrots in particular, interact with touchscreen technology?

Kleinberger and her team at the INTERACT Animal Lab, in collaboration with the University of Glasgow, trained 20 pet birds to tap a relocating red circle on a custom-designed Fitts’s law touch app, in a study that was covered by The New York Times. They analyzed the parrots’ results, videos of their interactions with the app, and their caregivers’ feedback. The parrots ranged in size and species, but all had previous experience interacting with tablets.

Aside from the obvious physical differences between parrots and humans (like the parrots being prone to “multi-taps” or dragging their taps across the screen), there were also more subtle differences. For instance, Fitts’s law, which predicts that the time needed to reach a target grows linearly with the logarithm of the target’s distance relative to its size, did not apply to parrots. Instead, the birds retreated from the screen after each touch, meaning both small and large movements took longer than the law would predict.
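For reference, the widely used Shannon formulation of Fitts’s law from human–computer interaction research is shown below. Here MT is movement time, D is the distance to the target, W is the target’s width, and a and b are constants fitted empirically for a given user and device; the logarithmic term is called the index of difficulty. This is the textbook form of the law, offered as background, and not necessarily the exact model the researchers fit to the parrots’ data.

$$
MT = a + b \log_2\!\left(\frac{D}{W} + 1\right)
$$

Because movement time is linear in this logarithm rather than in D itself, doubling the distance to a target of fixed width raises the index of difficulty by less than one bit, so the predicted time grows by less than b.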

In addition to designing systems that respond correctly to several long, soft taps instead of one firm one, the researchers recommended allowing elements like button size to be customized based on the size and personality of the bird. They also encouraged designers to keep human interaction involved; several caregivers reported that the most rewarding part of taking part in the study was the opportunity to bond with their birds.

19) Patient Perspectives on AI-Driven Predictions of Schizophrenia Relapses: Understanding Concerns and Opportunities for Self-Care and Treatment

Dong Whi Yoo, Hayoung Woo, Viet Cuong Nguyen, Michael L. Birnbaum, Kaylee Payne Kruzan, Jennifer G. Kim, Gregory D. Abowd (CoE), Munmun De Choudhury

For people with schizophrenia, serious relapses can involve self-harm and suicide. Catching and treating relapses early can prevent this sort of serious harm, but it’s hard for clinicians to constantly monitor patients for aberrant behavior. Could AI help, and how would people with schizophrenia feel about that?

To answer this question, the researchers interviewed 28 people with schizophrenia about the possibility of an AI using their social media behaviors to alert them to potential relapses. Because one symptom of a relapse is faltering self-perception, many respondents liked the idea of an external reference point against which to check their behavior; they also liked that the data could help spark important conversations with the people in their support networks.

They also had concerns, though, ranging from security and privacy qualms to worries that the feeling of being monitored could exacerbate paranoid delusions. The researchers also discovered that the algorithm and patients sometimes disagreed on what counted as a relapse, highlighting the importance of using the data to inform patients’ own health decisions rather than assuming the algorithm knows best.

Based on those perspectives, the researchers developed a prototype AI called Sompai, which was generally well received. Sompai used Facebook posts to flag abnormal user behavior consistent with a schizophrenia relapse. When it spotted something concerning, it sent the user a gently worded message and reminded them to sleep, take their medications, and practice good hygiene. The messages could also show Sompai’s accuracy rate and the specific posts that had triggered them, and offered to share that information with the user’s chosen support system in a single click.

20) Profiling the Dynamics of Trust & Distrust in Social Media: A Survey Study

Yixuan Zhang, Yimeng Wang, Nutchanon Yongsatianchot, Joseph D. Gaggiano, Nurul M. Suhaimi, Anne Okrah (CoE), Jacqueline Griffin (CoE), Miso Kim (CAMD), Andrea G. Parker

Digital communication presents amazing possibilities, but also serious new harms. In particular, misinformation on social media undermines trust and is difficult to counteract; doing so requires a nuanced understanding of the multifaceted dynamics of trust and distrust online.

To develop that understanding, these researchers surveyed almost 1,800 Americans about their trust in social media and their experiences with anti-disinformation features. They determined that trust and distrust are not polar opposites, but often exist simultaneously. They also discovered that trust and distrust behaviors vary significantly with demographic characteristics and with the platform on which the behaviors occur. The researchers concluded that the misinformation interventions that have gained popularity in recent years do heighten awareness of misinformation on social media and bolster trust in the platforms, but are not enough to alleviate users’ underlying distrust.

21) ReactGenie: A Development Framework for Complex Multimodal Interactions Using Large Language Models

Jackie (Junrui) Yang, Karina Li, Daniel Wan Rosli, Shuning Zhang, Yuhan Zhang, Yingtian Shi, Anisha Jain, Tianshi Li, Monica Lam, James A. Landay

Imagine you’re feeling hungry, so you open up Uber Eats. Tacos sound good. “Reorder my last meal from this restaurant,” you say, tapping the Taco Bell logo. Uber Eats, predictably, does nothing, because it isn’t coded to support complex multimodal commands that combine spoken words with touchscreen inputs. Coding these interactions has always been tricky and labor-intensive.

The researchers developed ReactGenie, a programming framework that uses object-oriented state abstraction to let developers encode multimodal commands quickly and cheaply. If developers’ state code is clearly defined, they can add annotations with examples of the desired inputs and outputs for specific objects. ReactGenie then uses those examples to guide a large language model that translates the user’s natural language into a domain-specific language called ReactGenieDSL, which the program interprets as commands.

The framework is extremely developer-friendly. When the team gave ReactGenie to 12 developers with experience in React, all 12 built applications within two and a half hours. ReactGenie is also highly accurate: when 50 users tested apps built with it, the system correctly parsed 90% of the multimodal commands within its scope.

“Imagine converting all current websites and mobile apps into a smart assistant, allowing you to control them through natural language as if you were talking to a person. ReactGenie brings us very close to achieving this in reality … this work has the potential to bring multimodal interactions into mainstream app development,” Khoury professor Tianshi Li said. “ReactGenie is a revolutionary idea, and I’m excited to be part of the team.”

22) Rethinking Human-AI Collaboration in Complex Medical Decision Making: A Case Study in Sepsis Diagnosis

Shao Zhang, Jianing Yu, Xuhai “Orson” Xu, Changchang Yin, Yuxuan Lu, Bingsheng Yao, Melanie Tory, Lace M. Padilla (+CoS), Jeffrey Caterino, Ping Zhang, Dakuo Wang (+CAMD)

While several AI tools for medical diagnostics have performed well in research settings, they often fail to live up to their promise in practice because of their inability to collaborate well with human users — sometimes even creating a “human–AI competition.” This phenomenon was reaffirmed by the six clinicians interviewed in this study, who insisted that the AI sepsis diagnosis tool implemented in their hospitals was incompetent, unhelpful, or an outright annoyance that added to their workload.

To determine what a helpful AI–physician collaboration might look like, the researchers listened to the clinicians’ concerns (including slow and vague predictions, a fatiguing number of false positives, and nonactionable alerts) and designed SepsisLab. The new tool displayed a patient list, patients’ personal information, recommended tests, sepsis risk scores (complete with historical data and uncertainty visualizations), and counterfactual explanations of why other potential diagnoses wouldn’t fit. SepsisLab was designed to reach the same diagnostic conclusions as existing tools, but rather than simply handing physicians results, it helped them develop and refine their hypotheses and gather data. All six clinicians gave significantly more positive feedback about the new tool.

“Most of today’s AI systems center around what AI can and cannot do, and design human experts like doctors to be the guardrail for AI in case its prediction is wrong. This is what we call human-in-the-loop AI architecture, and it often fails in real-world deployment because human users quickly abandon such an AI system,” Khoury and CAMD professor Dakuo Wang said. “We propose an AI-in-the-loop architecture that can center the AI system around the human’s workflow.”

23) Social Justice in HCI: A Systematic Literature Review

Ishita Chordia, Leya Breanna Baltaxe-Admony, Ashley Boone, Alyssa Sheehan, Lynn Dombrowski, Christopher A. Le Dantec (+CAMD), Kathryn E. Ringland, Angela D. R. Smith

Over the past decade and a half, and especially after 2020, human–computer interaction (HCI) studies have increasingly focused on interactions between computers and marginalized or disenfranchised communities. However, what social justice means, and how it’s framed and measured, varies significantly between research teams. That’s why Christopher Le Dantec, professor of the practice and the director of digital civics at Khoury College and CAMD, got together with some colleagues and reviewed 124 HCI papers published between 2009 and 2022 that related to social justice.

The team found that while researchers carefully considered how justice could be applied, they rarely defined the concept of justice itself. Instead, most authors defined the harms that social change would need to address for justice to be achieved. The most attention was paid to recognition harms, which affect one’s ability to engage in society, and autonomy harms, which inhibit self-actualization. Little attention was paid to cultural or environmental harms, the studied technologies’ benefits, or who received those benefits.

“As the field of HCI continues to consider the role of computing systems in either perpetuating or confronting systems of inequality and oppression, we need to have a better understanding of what we as an intellectual community mean with the term ‘social justice,’” Le Dantec said.

In addition to these definitional shortcomings, the last decade-plus of HCI social justice research paints a picture of a well-intentioned field that continues to limit itself by approaching social justice through a deficit model centered on repairing damage, rather than on promoting joy, wholeness, and care. To bridge this gap, future research should consider the harms and benefits of the topic at hand, who is harmed and who benefits, the sources of those harms and benefits, and possible sites and tools for intervention.

24) ‘Something I Can Lean On’: A Qualitative Evaluation of a Virtual Palliative Care Counselor for Patients with Life-Limiting Illnesses

Teresa K. O’Leary, Michael K. Paasche-Orlow, Timothy Bickmore

For terminally ill patients, palliative care can make the end of their lives as easy and comfortable as possible. But because physicians are often reluctant to refer patients to palliative care, and because providers are in short supply, many patients don’t receive its benefits until late in the trajectory of their disease. Could digital tools like an embodied conversational palliative care counselor help to bridge the gap?

The researchers attempted to answer this question by building a prototype tablet-based palliative care counselor, which 20 patients tested for six months. The patients said the system had helped to bring their health to the forefront of their minds and keep them accountable for healthy behaviors like exercise and meditation, while also relieving the stress of caring for their health. Socially isolated patients said they appreciated the care and connection the system offered them, while socially connected patients appreciated not feeling obliged to put a positive spin on their experiences, as they often did with their human support systems.

The patients also provided critical feedback that the researchers incorporated into design recommendations; in particular, patients wanted future versions to facilitate communication with their medical providers and be better personalized to their unique diagnoses, life situations, and personal preferences.

25) Stairway to Heaven: A Gamified VR Journey for Breath Awareness

Nathan Miner (CAMD), Amir Abdollahi (CoE), Caleb P. Myers, Mehmet Kosa (CAMD), Hamid Ghaednia, Joseph Schwab, Casper Harteveld (+CAMD), Giovanni M. Troiano (CAMD)

Studies have shown that mindful breathing techniques help people manage stress, anxiety, and depression while promoting feelings of peacefulness and calm. For beginners especially, though, it can be tricky to breathe deeply from the abdomen and to sustain attention long enough to reap the benefits. Could an interactive VR experience help?

To find out, these researchers developed Stairway to Heaven, a virtual reality experience to support mindful breathing. While being measured by a respiratory sensor, participants progressed through a series of calming VR forest locations to the top of a hill. Along the way, a meditative audio guide instructed participants about their breathing, and a green bar in the display gave users feedback about whether they were breathing deeply enough to progress.

Participants generally found the experience usable, relaxing, and engaging, and their breathing rates slowed by an average of 57% over the course of about 15 minutes. Individual differences shaped how participants felt the training went; for instance, people with previous meditation experience sometimes struggled to split their attention between the environment and their breath, while people experienced with VR games sometimes took a while to slow down and relax.

That some users wanted Stairway to Heaven to be more relaxing, while others wanted more engagement, highlights the difficulty of designing a system that promotes mindfulness for everyone. However, the fact that most participants enjoyed themselves and reached the target therapeutic breathing rate for at least some of the training reflects positively on the VR technology’s potential.

“Mindful attention to breathing, specifically deliberately engaging in slow breathing using the diaphragm … is a simple yet powerful way to reduce stress and improve feelings of well-being,” CAMD researcher Nathan Miner said. “Our research stands out for its unique approach of using virtual reality to support and engage users in self-regulating their breathing.”

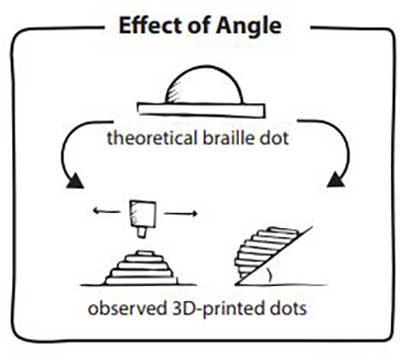

26) The Effect of Orientation on the Readability and Comfort of 3D-Printed Braille

Eduardo Puerta, Tarik Crnovrsanin, Laura South, Cody Dunne

3D printing offers an affordable way to produce braille for people who are blind or have low vision. One of the most common types of 3D printing, fused deposition modeling (FDM), works by stacking layers of molten plastic to build up each braille dot. The layers are always stacked horizontally, but designers can change the orientation of the dots by printing the model on a slant, at any angle from 0 to 90 degrees.

To determine which angles provide the quickest, most comfortable, and easiest reading experience, the researchers printed sample phrases at seven different angles and had 12 braille users evaluate them against a control produced by traditional embossing. The team measured users’ reading speed and solicited feedback about comfort and the ease of discerning letters and words.

All three metrics improved as the print plate angle increased, with a noticeable difference at 60 degrees and above. At 75 and 90 degrees, comfort and discernment matched those of the embossed control, and average reading speed was faster than at the other angles.

Printing at high angles, like 90 degrees, provides the best braille. However, printing at this angle is technically tricky because printing any model vertically can leave “overhangs,” or layers with nothing supporting them underneath. Therefore, the authors propose a 75-to-90-degree range that produces the ideal braille for the FDM technique and gives designers flexibility to avoid overhangs. If a higher angle can’t be used, the researchers also found that sanding down braille printed at lower angles could minimize discomfort and discernment issues.

27) Thinking Outside the Box: Non-Designer Perspectives and Recommendations for Template-Based Graphic Design Tools

Farnaz Nouraei, Alexa Siu, Ryan Rossi, Nedim Lipka

Design templates in tools like Canva and Adobe Express can be a great way for nondesigners to quickly create beautiful posters, fliers, and cards. But working from a template can also limit a person’s creativity and frustrate them when their media assets don’t fit into the template well.

To explore these tensions, the researchers had 10 students design posters advertising a clothing sale, first using anything they liked from a selection of nine templates, then working from a template assigned by the team. Participants tended to fall into two camps: those who preferred to pick and customize a template they liked, and those who preferred to mix and match elements from several templates into an original design. Today’s template interaction designs tend to support the pick-and-customize approach, which can hinder and frustrate mix-and-match users. Furthermore, some users were frustrated when their designs seemed subpar compared to the professional-looking templates they had started with, though they appreciated that the templates helped them avoid decision paralysis. Users tended to accept the templates’ creative limits as a trade-off for improved productivity.

To maximize benefits and minimize frustrations, the team recommended that future tools provide more visual decision-making support (including how to select the best template), add features like a mood board that encourage exploration and iteration, and identify and automate rote tasks using intelligent algorithms so that users can focus on more creative edits.

“Interactions with templates matter, such that if these interactions are not supportive of a user’s natural workflow, they can hinder creativity and satisfaction with the creative process,” Khoury doctoral student Farnaz Nouraei said. “I believe that a user-centered approach to building creativity support tools … can help us identify the various types of users in terms of their approach to the creative process and develop inclusive interaction schemes that help everyone feel creative while still enjoying the productivity gains from using these tools.”

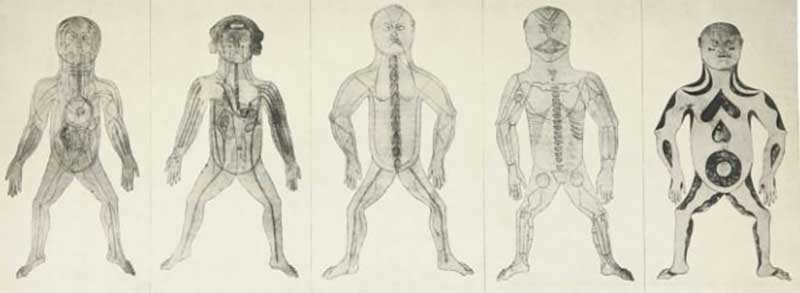

28) When the Body Became Data: Historical Data Cultures and Anatomical Illustration

Michael Correll, Laura Garrison

Our species has tried to picture what’s going on inside our bodies for thousands of years. The millions of visualizations we’ve created in that time emerged within a wide range of data cultures: ways that data, knowledge, and information are conceptualized, collected, structured, and shared. As a result, the historical record is filled with an array of different pictures of human bodies that reflect the time and culture in which they were created, just as our modern, “objective” visualizations do.

To explore and reflect on those visualizations, the researchers compiled historical vignettes about how anatomical illustrations have varied throughout history. For example, medical diagrams of all kinds leave out the specifics of systems irrelevant to their purpose, both to avoid visual clutter and to highlight the diagram’s rhetorical aims. Because Taoist concepts of the body focus on the flow of energies like yin, yang, and qi, muscular and skeletal systems were often left out of 15th-century Chinese diagrams. Early Greek culture valued physical vitality as a sign of divinity, so its anatomical diagrams offered detailed depictions of the muscular and skeletal systems but little specificity about things like circulatory rhythms.

“Somebody drawing the results of a candlelit autopsy on a possibly illegally acquired cadaver for a vellum manuscript is performing a fundamentally different design activity than somebody making an interactive visualization of volumetric imaging data for a virtual reality headset. The fact that both designers might be talking about the same anatomical structures is almost beside the point,” Correll said. “The resulting visualizations are just going to look different, be used differently, and be connected to a host of different world views.”

This paper reminds us that these anatomical visualizations reflect our historical and cultural positioning, and the history from which that position developed. The paper is also intended to encourage medical visualization creators to consider the rhetorical implications of their choices and what argument their drawing makes about human bodies, as well as the ethical context in which those choices take place.
