Inside the Spring 2024 Khoury Research Apprenticeship Showcase

Author: Attrayee Chakraborty

Date: 06.06.24

The Khoury Research Apprenticeship Showcase’s Boston edition took place on April 10, with 24 master’s students presenting the original research they conducted under their faculty advisers. Students who participate in the program receive a stipend and are supported with regular academic advising.

Click on a student’s name to learn about their work, or simply read on.

Kaushik Boora: A Research Platform for Autonomous Vehicles

Kaushik Boora believes that autonomous driving robots hold immense potential across diverse sectors such as logistics, healthcare, and home assistance. His project focuses on advancing these robots by creating a system that navigates indoor environments autonomously, and leverages ROS2 and tools like ROS2_control, Teleop twist keyboard, Gazebo, SLAM, and Nav2 to do so. Boora chose these tools to facilitate seamless communication between software components, precise actuator control, manual operation during development, and realistic simulations.

“We used a mix of these tools to position the robot exactly in the space we wanted to,” Boora says. “2D LIDAR technology could provide the robot’s environment map, while NAV2 Stack computed the optimal course of action.”

The team successfully generated a map within the simulation environment and implemented robot movement, validating their approach. They intend to study the vulnerabilities of autonomous vehicles in the future.

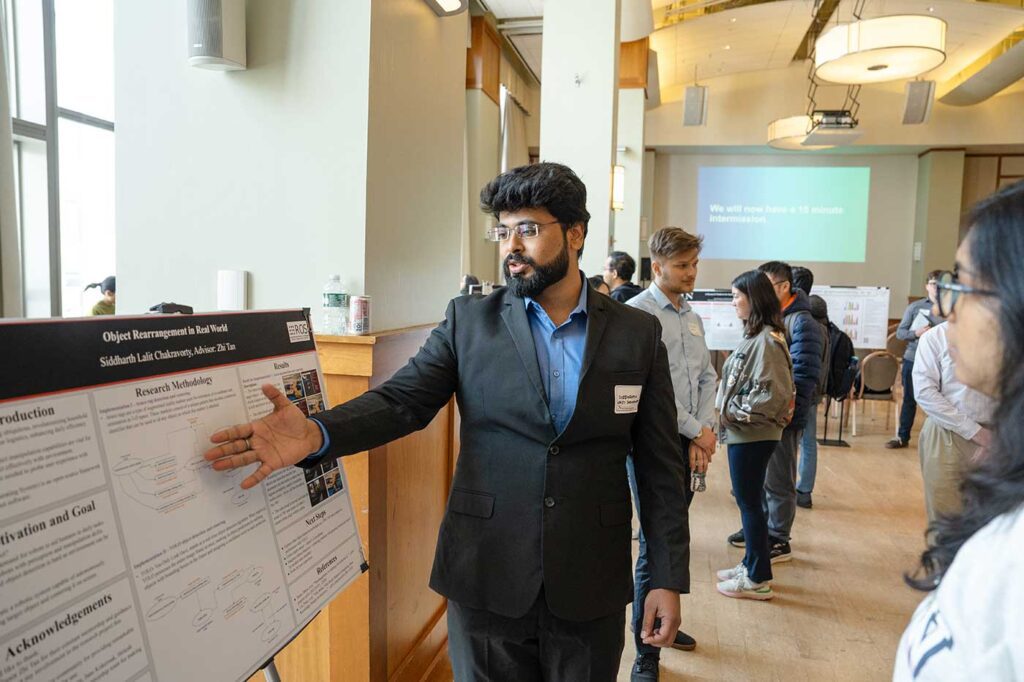

Siddharth Lalit Chakravorty: Object Rearrangement in Real World

Siddharth Lalit Chakravorty worked with the People and Robot Collaborative Systems (PARCS) Lab to create a robotic system capable of autonomously identifying a target object and centering it on the robot’s screen. The goal was to develop robots with increased perception and manipulation skills, with hopes of aiding humans in daily tasks.

“There were two implementations undertaken to complete this — an Aruco tag and YOLO,” Chakravorty says.

An ArUco tag is a fiducial marker which helps the robot get coordinates in 3D space, while You-Only-Look-Once (YOLO) centering was implemented as a real-time object detection algorithm. YOLO helps the robot identify target objects by providing coordinates and can be used to determine whether the robot picked up the object it intended to.

“Using these tools, the robot knows how much to pan its vision and tilt itself so that the object being picked up is in the center of the screen,” Chakravorty says.

Next, the team intends to explore other object detection techniques such as OWL-ViT, then compare their performance with YOLO. The team also intends to use depth sensors to get a better idea of how the robot can get the target object’s Z-coordinate for better performance in 3D space.

Alex Crystal: Enabling Culturally Aware Large Multi-Modal Models

Crystal worked with Saiph Savage to build culturally aware algorithms, which can adjust their outputs or behaviors based on factors such as the user’s language, geographic location, ethnicity, religion, or cultural context.

“When we tested models with GPT-4, we found problems in itemization and inaccuracies,” Crystal says, citing an example in which GPT-4 vision was distracted by plates and forks when presented with an image of food, and so failed to label the food properly amid the surrounding items. “To fix this, we are designing QuickCulturalSync, an annotation tool that enables us to create datasets that are culturally aware. The models are aimed to be built with more accurately labelled data from a ‘bottom-up’ approach.”

Savage’s lab is in the initial phases of creating these training datasets. In the future, the researchers intend to design metrics to evaluate language models’ cultural awareness, and to create culturally adaptive interfaces for data labeling of objects.

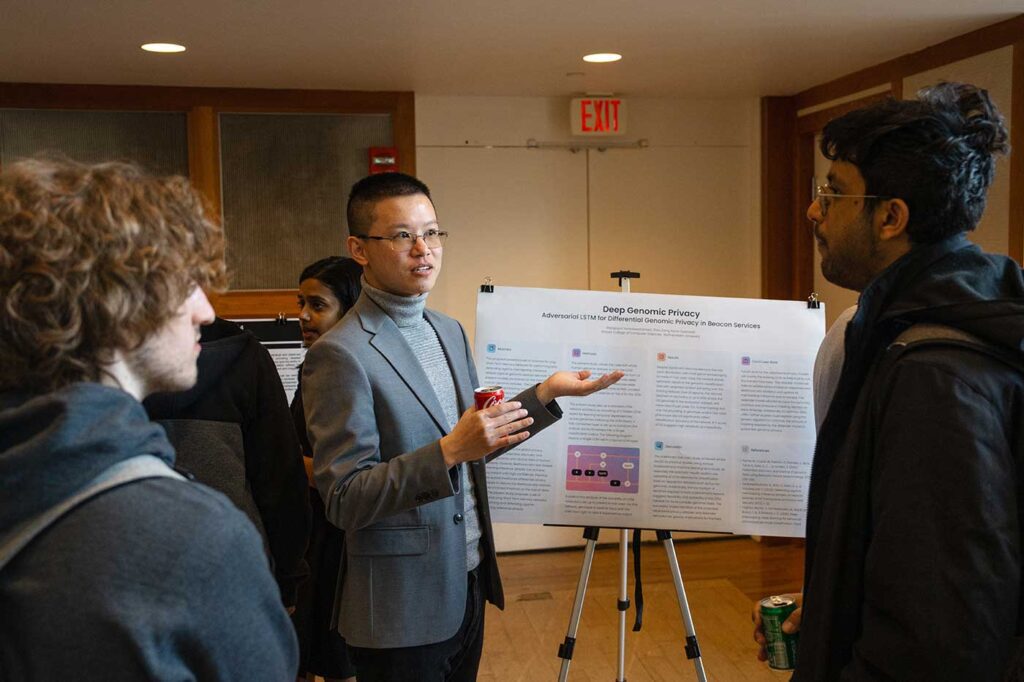

Zhou Fang: Deep Genomic Privacy

Under the supervision of Rajagopal Venkatesaramani, Fang studied adversarial long short-term memory (LSTM) networks, namely how they can prevent membership inference attacks against the beacon protocol — the global standard for the federated discovery and sharing of human genomic data.

“It’s been discovered that likelihood ratio test-based privacy attacks can infer about genome memberships in the summary statistics,” Fang says. “Existing methods to mitigate membership inference attacks use traditional differential privacy techniques to procedurally add noise to a given data set at the cost of data utility.”

Fang’s study proposes a set of adversarial LSTM networks to flexibly and adaptively add noise to guarantee genomic privacy while minimizing data utility loss.

“As a preliminary proof of concept to study of the suitability of LSTM to genomic data, we set up an LSTM network to predict hair color phenotype from sequential genotype data,” Fang says. “As expected, LSTM networks show great compatibility for classification tasks involving sequential data, with comparable accuracy of roughly up to 80% for the hair color classification task.”

Going forward, Fang and his team will modify their hair color model to function as both an adversarial attacker and defender model, with potential implications for biotech, federated learning, and differential privacy.

Lauryn Fluellen: Technical Design of the Fediverse: A Retrospective Discourse Analysis

Fluellen, under the supervision of Michael Ann DeVito, presented her study on the technical design of the fediverse.

The fediverse is a decentralized social media network comprising interconnected servers and communities, offering an alternative to large, centralized platforms like Facebook. Each community has its own rules and moderation, and users can join different communities depending on their interests. This decentralized model prioritizes user control, privacy, and interoperability, fostering a vibrant ecosystem of interconnected communities, especially for marginalized people.

“There is ambiguity around the decision-making processes surrounding critical features of the fediverse,” Fluellen says. “Using a created dataset of information on the fediverse, we tried to study how to ensure compliance with privacy laws in a decentralized environment.”

Fluellen has been studying risks and uncertainties within the fediverse to promote user safety and well-being. Through a retrospective analysis, Fluellen gained deeper insights into fediverse governance and identified potential improvements in deletion, blocking, and privacy features.

“We found that decisions regarding bug fixes often lack structured processes, leaving user data and privacy at risk,” Fluellen says. “Unlike centralized platforms where user satisfaction is prioritized, the fediverse may advise dissatisfied users to seek alternatives, reflecting the autonomy of developers.”

Fluellen’s team intends to provide actionable recommendations and standardized protocols for enhancing user experience and platform functionality within the fediverse. In the future, they also intend to investigate the implications of decentralized development on user trust, privacy, and platform sustainability, seeking opportunities for improvement and innovation.

Vidya Ganesh: Multi-modal Fact Checking of Out-of-Context Images via GPT4

Ganesh worked with Huaizu Jiang to explore the potential of large foundation models in fact-checking image–caption pairings.

“This is particularly important in fact-checking misleading content on social media,” Ganesh says. “Often, we find out-of-context images are commonly used to spread false narratives, and fact-checking is costly and time-consuming.”

Through integration with image recognition tools, GPT-4 can assess whether an image’s content matches its caption by understanding the objects, scenes, and activities depicted in the image. By cross-referencing with reliable web-based sources, GPT-4 can leverage its vast training data to cross-reference claims made in captions, using web search APIs to find corroborating or contradicting evidence.

“We used data from Twitter focused on verified posts from BBC journalists,” Ganesh says. “Through web scraping, we obtained all the details from the identified out-of-context posts and the posts that flagged them. We then used search engines to verify this information.”

Using that approach on chain-of-thought prompt engineering, GPT-4 performed better not just in identifying misinformation, but in showing how the information was incorrect.

Ryan Heminway: Designing Neural Networks with Evolution

Heminway, an AI master’s student, worked with Jonathan Mwaura to research the process of designing neural networks, how algorithms can automate the creation of neural networks for specific tasks, and how neural networks can be used in designing components.

“We are seeking to automate design processes by using evolutionary algorithms,” Heminway said.

Evolutionary algorithms are a class of optimization algorithms inspired by biological evolution, which mimic the process of natural selection to solve complex optimization problems. Heminway compared two algorithms — NeuroEvolution for Augmenting Topologies and Gene Expression Programming for Neural Networks — to see what decisions they made, how they translated to the neural networks they produced, and why they came up with different architectures.

Rachana Kallada Jayaraj: Automated Failure Recovery of Coordinators in a Serverless File System

Jayaraj worked with Ji-Yong Shin on serverless file systems (SLFS), which leverage serverless functions to allow for greater elastic scalability and cost-effectiveness in distributed file systems.

A distributed file system (DFS) is a data storage and management scheme that allows users and applications to access files such as PDFs, text documents, images, videos, and audio files from shared storage across any one of multiple networked servers. With data shared and stored across a cluster of servers, DFS enables users to share storage resources and data files across many machines.

“SLFS introduces a function coordinator to manage request flow, ensuring efficient load balancing and scalability,” Jayaraj says. “However, vulnerability of the coordinator is a single point of failure in routing file requests.”

Jayaraj addressed this weakness with an automated recovery mechanism that swiftly detects and restores failed coordinators, ensuring uninterrupted functionality.

“We implemented a continuous monitoring system and tested through failure recovery,” Jayaraj says. “If a coordinator unexpectedly fails, the monitoring proxy swiftly initiates the recovery process by bringing up the failed coordinator.”

Currently, the failure recovery testing has been conducted on a single-node setup, a configuration where the entire file system is hosted on one server or node. The team hopes to test on a distributed multi-node setup to validate its performance in a more realistic environment.

Vamshika Lekkala: Uncertainty-Aware Machine Learning for Exo Control

Lekkala, under the supervision of Michael Everett and Bouvé–College of Engineering Professor Max Shepherd, researched around-the-clock ankle exoskeletons which can assist or remain passive as needed. These exoskeletons are designed to aid people with ankle injuries, enhance mobility for people with neuromuscular disorders, and support elderly people looking to prevent falls and improve balance.

“Ankle exoskeletons hold promise for enhancing human mobility, yet their efficiency is often compromised by inadequate control mechanisms,” Lekkala says. “We tried to solve this using ensemble models with multiple temporal convolutional networks (TCNs) … Each TCN provides four labels, such as velocity, stance phase and so on, which can be used to estimate the next position of the ankle. Based on whether the TCNs agree or disagree with each other, we conclude whether a task is assistable or not.”

So far, Lekkala and her team have measured model performance, and will soon analyze their results.

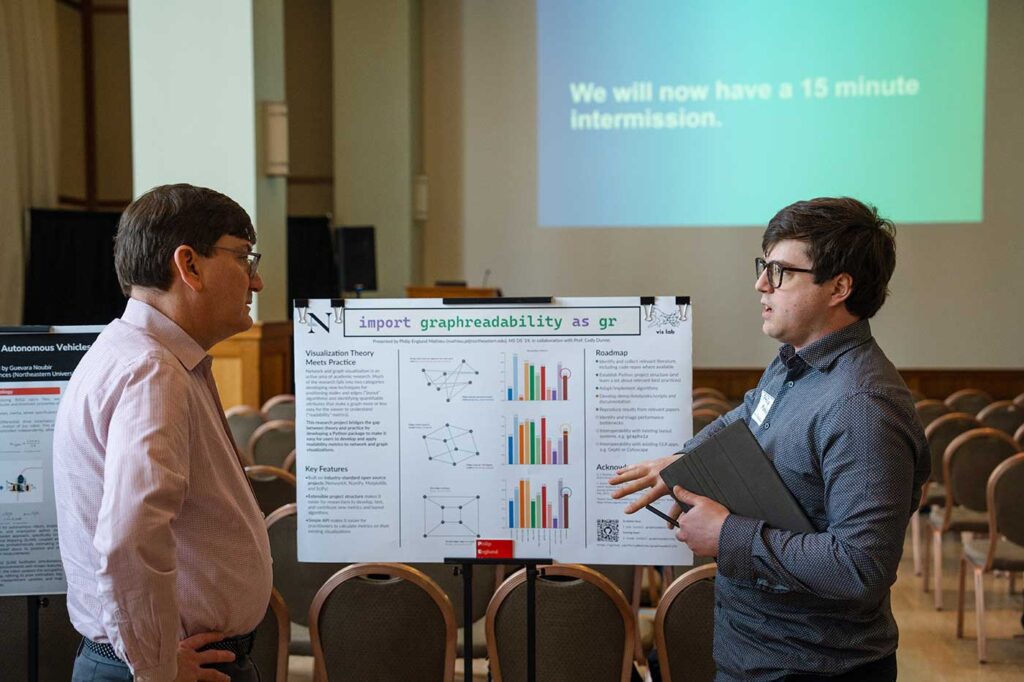

Philip Englund Mathieu: graphreadability

Mathieu collaborated with Cody Dunne and the Khoury Vis Lab on network and graph visualization. His project bridges the gap between theory and practice through “graphreadability,” a Python package that allows users to develop and apply readability metrics to network and graph visualizations.

“Graph visualizations are used by computer scientists all the time,” Mathieu says. “However, sometimes they may be difficult to interpret and easy to overlook. We want to quantify how one diagram is easier to read than the other.”

Mathieu and his team built an open-source, user-friendly Python package for researchers to test out new metrics and apply them to existing visualizations.

Divyadharshini Muruganandham: Assessing the Departure from Linearity in a Functional Network of Interictal EEG Measurement

Muruganandham worked with Wes Viles to develop a novel estimation procedure that identifies statistical associations in complex networks and quantifies relative information in the context of epilepsy to understand neural interactions.

“We aimed to model the statistical behavior of a functional brain network during seizures and enhance the understanding of different analytical methods,” Muruganandham says. “This can help in addressing the shortcomings of current diagnostic and treatment strategies in capturing intricate neural interactions.”

Muruganandham used interictal electroencephalography (IEEG) datasets from OpenNeuro for her analysis. IEEG is a multivariate time series of electrical activity in the brain, as recorded by subdural or depth electrodes.

Using equations and statistical methods — such as linear models with symmetry and auto-encoders — Muruganandham devised computational methods for better understanding seizures.

“The reason we chose models with symmetry is that our brain is also symmetrical,” Muruganandham says. “When two points on either side of the brain interact, the communication is also bidirectional. Our results with these models have shown less error in use because of this.”

The team intends to further explore the idea of coupling the equations, with prediction accuracy and model interpretability as their goals.

Bruce Neri: Impact of StoryMap on Physical Activity Engagement, A Study on Low Socioeconomic Status Families

Neri worked on StoryMap, an app meant for increasing physical activity in adults.

“Adults are not usually able to work out as much as they did when they were younger, especially those who have kids,” Neri says. “When parents don’t work out, kids don’t end up having much physical activity either. We employed StoryMap engage parents with kids through physical activity.”

Neri and his team employed StoryMap over five weeks with 16 low-socioeconomic-status families. Neri translated subject recruitment documents from English to Spanish for wider bilingual accessibility.

“I enhanced the operational efficiency and inclusivity of the research study,” Neri says. “Through modernizing the app codebase and integration of the app with Fitbit, we could study the influence on participants’ attitudes towards physical activity … Whenever they would engage with the app, they remembered the good experiences that they had from sports and that motivated them to also do that with their kids.”

In the future, Neri and his team intend to integrate machine learning and a broader range of health metrics into StoryMap, and to further expand the app to diverse communities.

Kush Pandya: Split Processes in Computer Systems as Applied to MPI in HPC

Pandya worked with Gene Cooperman on checkpointing processes in high-performance computing. Checkpointing involves periodically saving the state of a running application so that in the event of failure, the computation doesn’t need to restart from scratch — just like a video game saving a player’s progress.

Pandya used MANA — an MPI-Agnostic Network-Agnostic transparent checkpointing tool that saves the state of a program running on multiple processors or nodes — to split an MPI library in two halves.

Annie Pates: Improving text-based search of 3D object databases

A 3D object database is a type of database management system that stores and manages 3D objects and their associated data. It is designed to handle complex data structures, such as 3D models, graphics, and multimedia content, which are difficult to store and manipulate in traditional relational databases.

Pates, under Megan Hofmann, worked to improve object labeling, allowing for more accurate searches based on corresponding segments and keywords.

“The inspiration came from my experience working with open-source models for 3D printing,” Pates says. “However, with user-generated models comes user-generated descriptions, which may be inaccurate. This makes trying to search these models using text very challenging.”

In her research, Pates worked to find the common segments in 3D mesh files and match them to correct words in their corresponding descriptions.

“For example, we can see those models with ‘tube segments’ and ‘arc segments’ often correspond to the words ‘cup’ and ‘handle,’” Pates says. “But models with only ‘tube segments’ correspond to only ‘cup.’”

From this, Pates concluded that models with only ‘tube’ segments correspond to only ‘cup’. Currently, she is refining the model based on accurately labeling specific unlabeled segments and correctly matching associated models. The team’s end goal is to use this model for 3D-printable accessible devices, such as prosthetic arms.

Ashish Pawar: Can GPT Price Stock Options?

Pawar, in collaboration with Divya Chaudhary, has been questioning how the prices of call and put options are determined when trading stocks. Options give the holder the right, but not the obligation, to buy (call option) or sell (put option) an asset at a specified “strike price” before the option’s expiration date.

“There are many ways to price an option,” Pawar says. “Some are mathematical and some can be derived using machine learning models. One widely known model is the Black-Scholes model. The question that we ask is: Can GPT price these options?”

Using five years of data, Pawar trained GPT-3.5 to create three different models for stocks such as Google and Apple. The model equaled or exceeded the Black-Scholes model in pricing out-of-the-money options for Apple stocks. For Google stocks, the trained model beat the Black-Scholes model in all categories of pricing.

Wing Sum Poon: A Digital Biometric Approach to Reducing Hospital Admissions for Underserved Older Adults with COPD

Poon, under the supervision of Holly Jimison, helped research an innovative app aimed at treating chronic obstructive pulmonary disease (COPD) by using unobtrusive monitoring and acoustic data to detect if a patient’s disease is worsening. Poon was involved in three aspects of this project: developing the mobile care app, usability testing, and conducting a feasibility plot.

“We used interactive messaging, reports, and educational materials in our app to guide users,” Poon says. “Additionally, we performed two rounds of usability testing with five subjects each.”

To detect COPD, Poon and her team used a deep learning classifier called YAMNet. The project is in a very early stage, with the team still planning to redesign the app, deploy it in a larger clinical trial, and commercialize it nationwide.

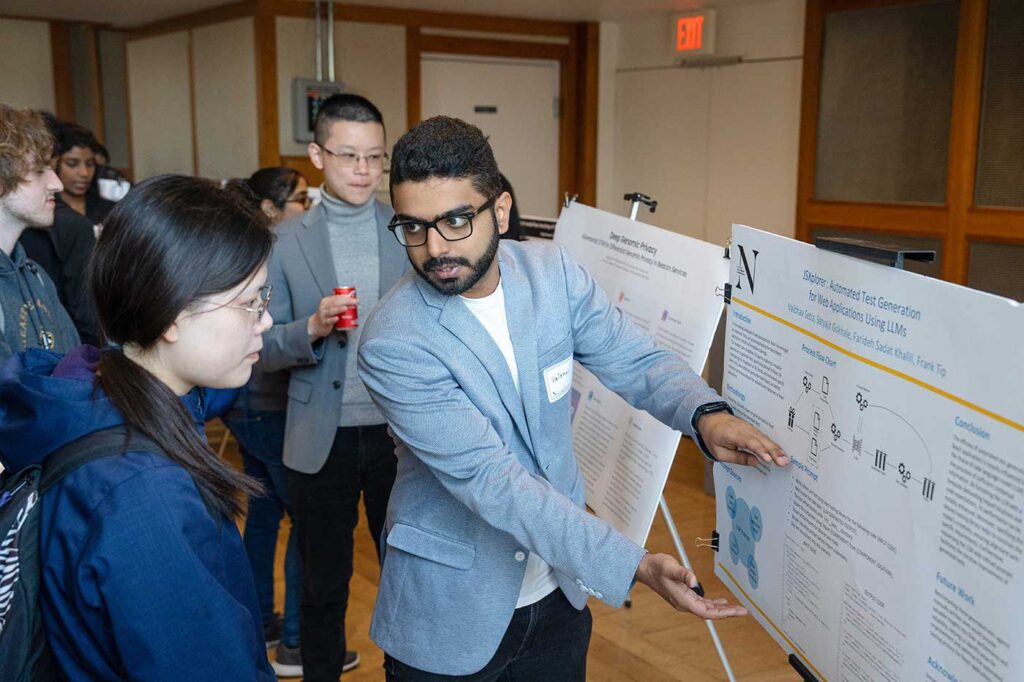

Vaibhav Setia: JSXplorer : Automated Test Generation for Web Applications using LLMs

Setia, under the supervision of Frank Tip, investigated how to generate tests for web applications using large language models (LLMs).

“Testing software for functionality is difficult, and generating automated tests are even harder, Setia says. “We extracted code from software packages, gave it to the LLM, and asked it to interpret what the code does. The LLM gave us a couple of tests, which we ran to see whether the tests cover all the edge cases or not.”

When the team found that some tests generated by the LLM were not comprehensive, they fine-tuned them in an iterative manner to make them perfect. For now, the team has identified the best prompts and uncovered some inconsistencies; going forward, they plan how to scale their efforts to more projects.

Dilshath Shaik: Harnessing EANT for Advanced Neural Networks

Shaik worked with Jonathan Mwaura to discover whether evolutionary acquisition of neural topologies (EANT) facilitates the evolutionary design of neural networks, and what factors contribute to its limited usage in the field.

“This innovative approach allows us to evolve both the structure and weights of neural networks efficiently, offering a promising avenue for advancements in reinforcement learning,” Shaik says. “This offers a great tool for optimizing the selection of algorithms, especially by incorporating genetic operations such as structural mutations and crossover, which help in evolving highly adaptable neural architectures.”

Their findings emphasize the unique capability of EANT to balance exploration of new topologies with the exploitation of effective existing designs, a process that enhances learning and adaptation in dynamic environments.

Shaik and Mwaura are aiming to broaden the technology’s application, ease its integration into mainstream neural network design practices, and address the challenges of computational efficiency and scalability inherent in EANT.

Justin Steinberg: Enhancing Multi-Modal Health Data Annotation with LLM Assistance

Steinberg, under the supervision of Jiachen Li and Varun Mishra, seeks to bridge the gap between raw sensor data and actionable health insights for older adults by assisting researchers with innovative data annotation and visualization tools.

Steinberg illuminated several challenges of sensor technology, such as data annotation of patient data, which is a complex, time-consuming process that demands accuracy. Steinberg strove to understand the annotation process, collaborative annotation with large language model (LLM) assistants, and how LLM assistants can be used for data annotation.

“LLMs can provide contextual information on raw data which can be verified by experts,” Steinberg says.

Steinberg presented his team’s workflow for LLM-assisted data labeling, which involved developing annotation guidelines, preliminary annotation, evaluating performance against expert labels, and redefining guidelines and prompts based on performance. The team tested the LLM output against human-labeled samples for final validation.

“There are still a number of challenges,” Steinberg says. “Standardizing different data formats, incorporating a human in the loop, and maintaining transparency of models are key challenges.”

Benjamin Wolff: Statistical Analysis of Mass Spectrometry Imaging (MSI) Arthritis Dataset

Fueled by his interest in statistical analysis and biological data, Wolff analyzed mass spectrometry imaging (MSI) data, focusing on knee images from people with arthritis.

MSI data includes information about bone, cartilage, and other biological tissue, and identifies compounds and molecules in different sections of the tissue. Wolff’s goal was to identify compounds that were expressed with different intensities in the arthritis group compared to the control group. To do so, he used a linear mixed effects model that considered the effects of variation in data.

“I conducted contrasts (t-tests) for 20–30 potentially significant compounds,” Wolff says. “I explored differences both between the tissues as a whole and between the cartilages of the tissues specifically.”

Yuwei (Kate) Wu: Maintaining Engagement of Older Adults with Cognitive Impairments Through SoftBank Pepper Robot

Wu, advised by Timothy Bickmore and Yunus Terzioglu, was inspired by the observation that interactive computer games and social assistive robots can sustain engagement and bolster caregiving efforts. People with mild cognitive impairments (MCIs) often face challenges such as disorientation, which can diminish their involvement in advantageous health interventions.

“Professor Bickmore’s team has emphasized a robotic agent called Pepper, which has social capabilities and has been used to support caregiving tasks,” Wu says. “For our experiments, we came up with four different engagement detection methods to evaluate how interactions with Pepper can help sustain the attention and participation of older adults in meaningful activities.”

Wu and her team combined Pepper with a tablet interface that allowed participants to play games such as tic-tac-toe. As participants played and interacted with Pepper, the researchers tracked their eye contact, monitored their interactions with the game, and gauged the physical proximity and posture of the robot when the participant won or lost. This helped them evaluate how Pepper engages with participants and how this can be used to improve care towards patients afflicted with MCI, especially older patients.

Yuanyuan Yang: Learning Galaxy Intrinsic Alignment Correlations

Yang, under the supervision of Robin Walters, explored how neural networks can help physicists advance their understanding of Intrinsic Alignment (IA), a phenomenon which contaminates weak gravitational lensing signals and inhibits scientific study of the universe’s large-scale structure.

IA analyses, traditionally modeled by analytical approaches which fail to capture alignments in the full non-linear regime, have recently turned to simulation models for more accurate descriptions. These methods, however, suffer from computational expense and would benefit from efficient modeling on GPUs.

“We propose a deep learning approach using neural networks,” Yang says. “We used cosmological parameters as input. Our model was found to capture the underlying signal as well as the overall shape of IA.”

Mao Zhang: Teaching the Graphics Pipeline Using Interactive Matlab Apps

Zhang, advised by Mike Shah, set out to build an interactive learning tool for students to learn and explore basic math concepts in computer graphics. The tool responds visually as the user interacts with shapes and displays an information board to help students understand related concepts.

“Users can interact by moving the sliders and see each transformation change along each stage of the modeling, viewing, and projection (MVP) process,” Zhang says. “The MVP process has been successful in creating a projection of 3D objects onto a 2D screen for students to visualize.”

Zhang and his team plan to further evaluate their tool with introductory computer graphics students to examine its impact on learning outcomes and expand its applications in teaching.

Jiawen Zou: ML-Driven Discovery of New Materials for Quantum Computers

Zou, under the supervision of Miguel Fuentes-Cabrera, has been working on discovering new materials for quantum supercomputers.

“Traditional density functional theory calculations are time-consuming,” Zou says. “We seek to automate the process and speed up discovery using machine learning.”

Zou and his team used structured, numerical energy values that their multilayer perceptron model could efficiently use to learn complex nonlinear relationships. By using appropriate dropout to mitigate overfitting and employing suitable regularization to constrain the model complexity, Zou and his team reduced the prediction relative error to 7%.

In their future work, the researchers plan to train autoencoder models on density of states (DOS) graphs. They also aim to integrate energy and DOS prediction networks, with the goal of creating a unified network for more efficient analysis in real-world material discovery scenarios.

The Khoury Network: Be in the know

Subscribe now to our monthly newsletter for the latest stories and achievements of our students and faculty