CS6220 Unsupervised Data Mining

HW3A Dimensionality Reduction, Supervised Classification

Make sure you check the syllabus for the due date. Please use the notation adopted in class, even if the problem is stated in the book using a different notation.

We are not looking for very long answers (if you find yourself writing more than one or two pages of typed text per problem, you are probably on the wrong track). Try to be concise; also keep in mind that good ideas and explanations matter more than exact details.

Submit all code files to Dropbox (create a folder named HW1 or similar). Results can be pdf or txt files, including plots/tables if any.

"Paper" exercises: submit using Dropbox as pdf, either typed or scanned handwritten.

DATASET : SpamBase : emails (54-feature vectors) classified as spam/non-spam

DATASET : 20 NewsGroups : news articles

DATASET : MNIST : 28x28 digit B/W images

DATASET : FASHION : 28x28 B/W images

https://en.wikipedia.org/wiki/MNIST_database

http://yann.lecun.com/exdb/mnist/

https://www.kaggle.com/zalando-research/fashionmnist

PROBLEM 1: Supervised Classification Libraries: Regression, Decision Tree

6 runs of supervised training/testing: 3 datasets (MNIST, Spambase, 20NG) x 2 classification algorithms (L2-regularized Logistic Regression, Decision Trees). You can use a library for the classification algorithms, and you can also use any library/script to convert the data into the appropriate formats.

You are required to explain/analyze each trained model in terms of its features: for each of the 6 runs, list the top F=30 features. For Logistic Regression these are the F coefficients with the highest absolute value; for Decision Tree they are the features used in the first F splits.
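As a minimal sketch of the coefficient ranking described above, the following Python snippet sorts features by absolute coefficient value and keeps the first F. The function name `top_features`, the feature names, and the coefficient values are all made up for illustration; in practice the coefficients would come from whichever library trained the model. For multi-class data (MNIST, 20NG) a library typically returns one coefficient vector per class, so ranking per class is one possible reading of the requirement, not something the assignment specifies.

```python
def top_features(coefficients, feature_names, f=30):
    """Return the f (name, coefficient) pairs with the largest |coefficient|."""
    ranked = sorted(
        zip(feature_names, coefficients),
        key=lambda pair: abs(pair[1]),  # rank by magnitude, ignoring sign
        reverse=True,
    )
    return ranked[:f]


# Hypothetical coefficients for five made-up features (illustration only).
names = ["free", "money", "meeting", "george", "!"]
coefs = [1.8, 2.4, -0.3, -3.1, 0.9]

top3 = top_features(coefs, names, f=3)
print(top3)
```

Keeping the signed coefficient next to each name shows whether a top feature pushes toward the positive or the negative class.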

In particular, for Decision Tree on 20NG, report performance for two tree sizes (measured by tree depth, number of leaves, or number of splits).

PROBLEM 2 : PCA library on MNIST

A) For the MNIST dataset, run a PCA library to reduce the data to D=5 features. Rerun the classification tasks from PB1 and compare the testing performance with the one from PB1. Then repeat this exercise for D=20.

B) Run PCA library on Spambase and repeat one of the classification algorithms. What is the smallest D (number of PCA dimensions) you need to get a comparable test result?

PROBLEM 3 : Implement PCA on MNIST

Repeat PB2 exercises on MNIST (D=5 and D=20) with your own PCA implementation. You can use any built-in library/package/API for : matrix storage/multiplication, covariance computation, eigenvalue or SVD decomposition, etc. Matlab is probably the easiest language for implementing PCA due to its excellent linear algebra support.
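For reference, the standard PCA steps built from those pieces (covariance computation, eigendecomposition, matrix multiplication) can be written as below. The symbols are assumptions chosen for this sketch and may differ from the notation used in class: $X$ is the $N \times d$ data matrix with one image per row, and $D$ is the target dimension (5 or 20).

```latex
\begin{align*}
\mu &= \frac{1}{N}\sum_{i=1}^{N} x_i
  && \text{(mean of the training data)} \\
X_c &= X - \mathbf{1}\mu^{\top}
  && \text{(centered data)} \\
C &= \frac{1}{N} X_c^{\top} X_c
  && \text{(}d \times d\text{ covariance matrix)} \\
C\, w_k &= \lambda_k w_k, \quad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_d
  && \text{(eigenvectors sorted by eigenvalue)} \\
W_D &= [\, w_1 \;\; w_2 \;\; \cdots \;\; w_D \,]
  && \text{(top } D \text{ eigenvectors as columns)} \\
Z &= X_c W_D
  && \text{(}N \times D\text{ reduced representation)}
\end{align*}
```

Using $\frac{1}{N-1}$ instead of $\frac{1}{N}$ rescales the eigenvalues but leaves the eigenvectors unchanged. Test data should be centered with the training mean $\mu$ and projected with the same $W_D$.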

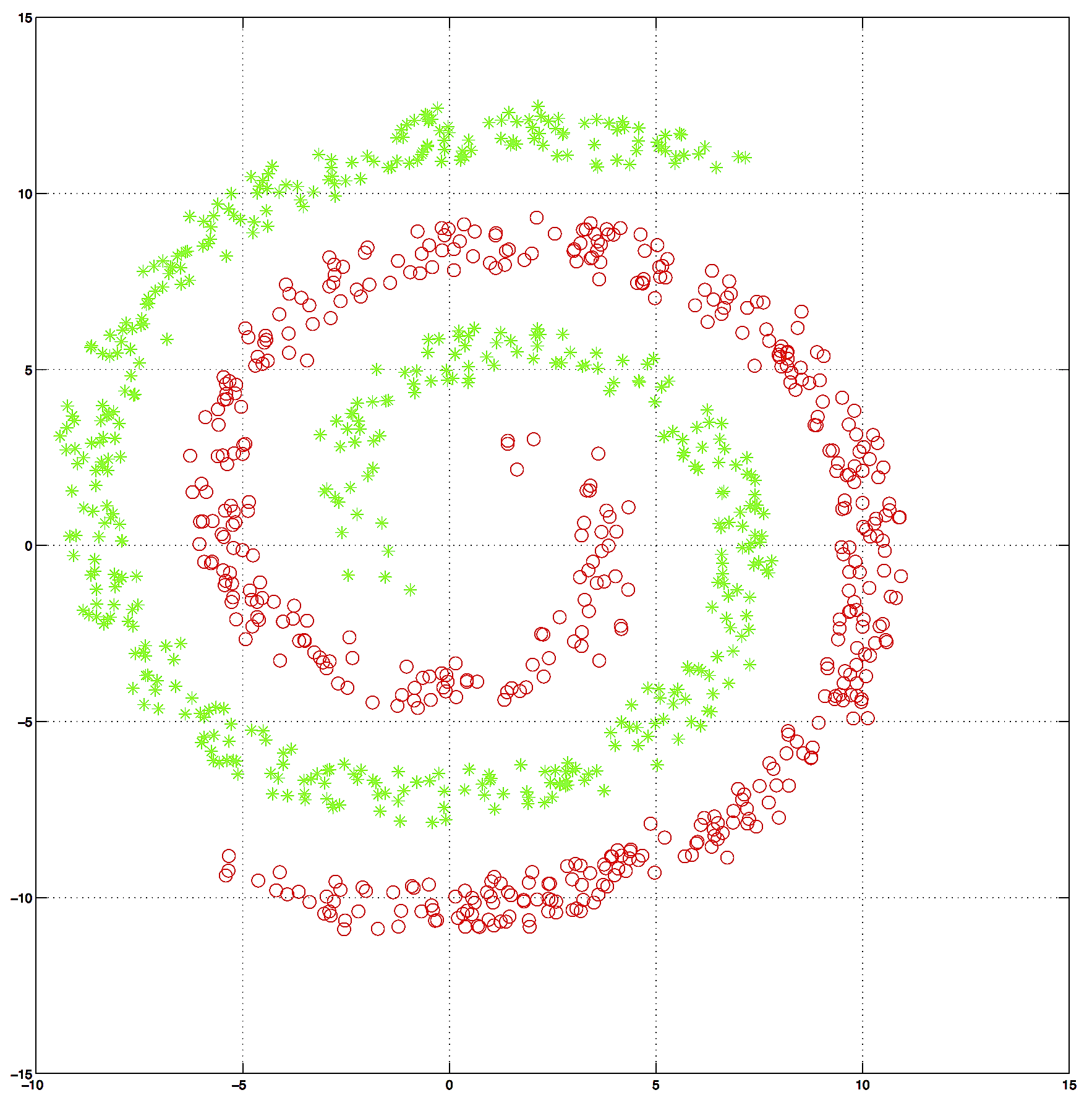

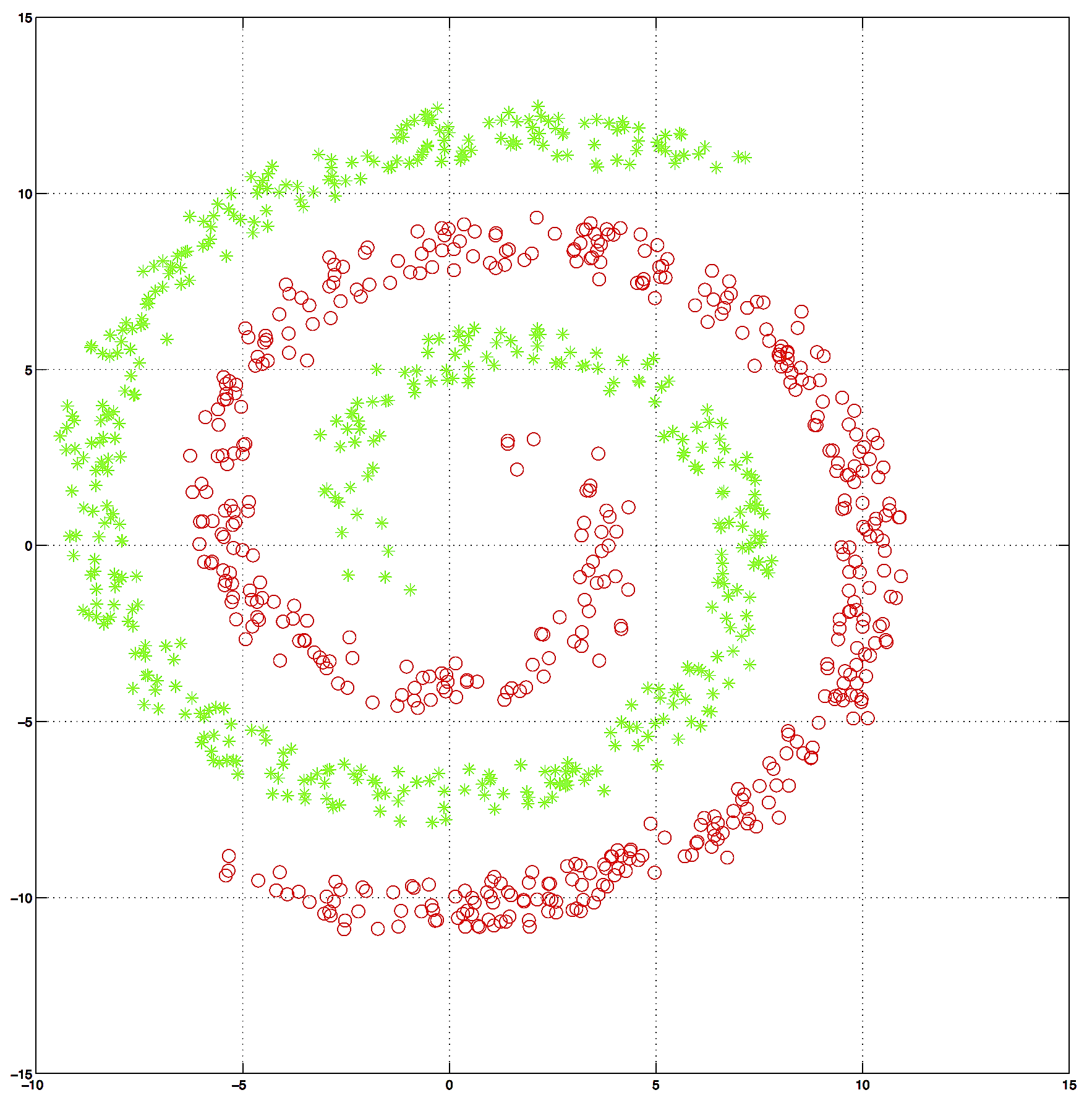

PROBLEM 4 : PCA for cluster visualization

A) Run KMeans on MNIST data (or a sample of it)

B) Run PCA on same data

C) Plot the data in 3D using the PCA representation with the t=3 top eigenvalues; use shapes to indicate the true digit label (circle, triangle, "+", stars, etc.) and colors to indicate the cluster ID (red, blue, green, etc.).

D) Select 3 other eigenvalues at random from the top 20; redo the plot several times.

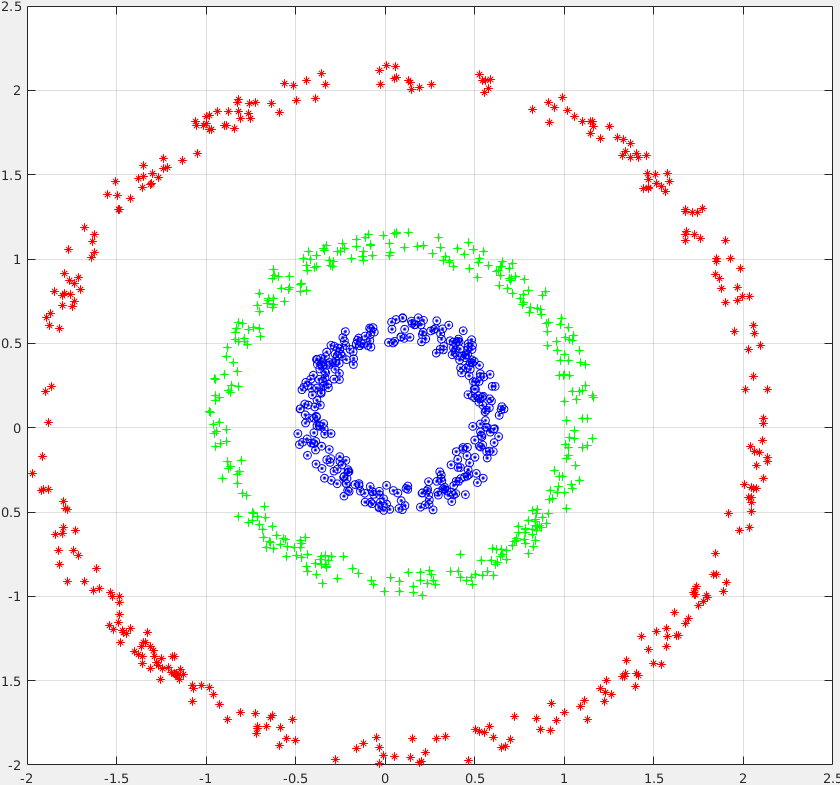

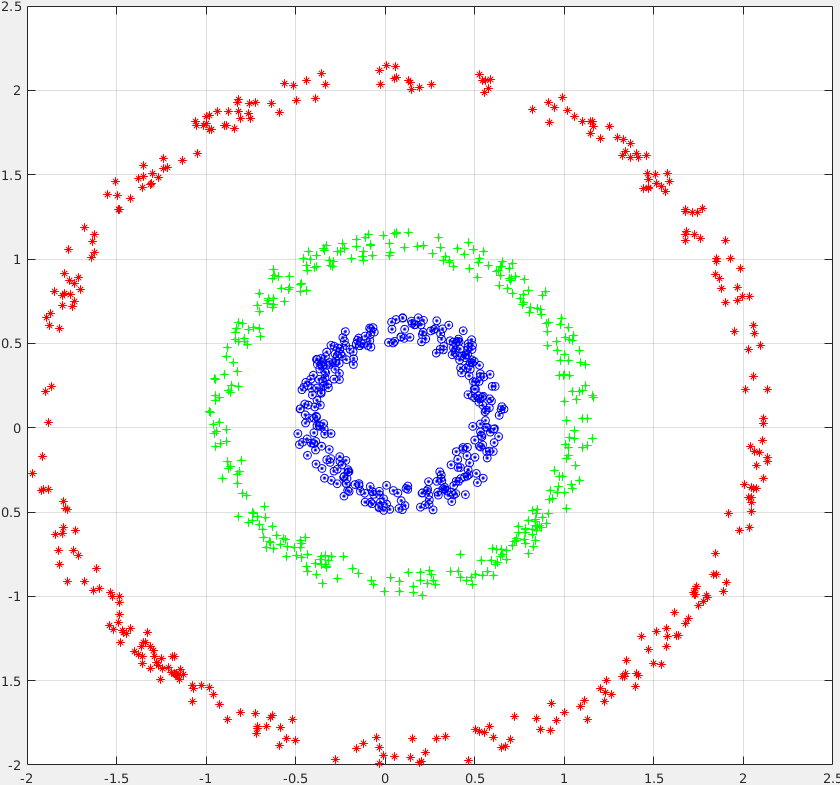

PROBLEM 5 : Implement Kernel PCA for linear regression

Dataset: 1000 2-dim datapoints TwoSpirals

Dataset: 1000 2-dim datapoints ThreeCircles

A) First, train a Linear Regression (library) and confirm that it doesn't work, i.e. it has a high classification error or a high Root Mean Squared Error.

B) Run KernelPCA with a Gaussian kernel to obtain a representation with T features. For reference, these are the steps we demoed in class (Matlab):

% X is the N-by-d data matrix, one datapoint per row
N = size(X,1);

% get pairwise squared Euclidean distances
X2 = dot(X,X,2);
DIST_euclid = bsxfun(@plus, X2, X2') - 2 * X * X';

% get the N-by-N kernel matrix
sigma = 3;
K = exp(-DIST_euclid/sigma);

% center the kernel so that it corresponds to zero-mean features
U = ones(N)/N;
Kn = K - U*K - K*U + U*K*U;

% obtain the kernel eigenvalues and eigenvectors, then sort them with the largest eigenvalue first
[V,D] = eig(Kn,'vector');
[D,sorteig] = sort(D,'descend');
V = V(:, sorteig);

% project the data onto the sorted eigenvectors
XG = Kn*V;

% get first 3 dimensions
X3G = XG(:,1:3);

% get first 20 dimensions
X20G = XG(:,1:20);

% get first 100 dimensions
X100G = XG(:,1:100);
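The Matlab steps above correspond to the following formulas, written here as a reading aid. The symbols mirror the variable names in the listing (`DIST_euclid`, `K`, `U`, `Kn`, `V`, `XG`); the mathematical notation itself is an assumption and may differ from the one used in class.

```latex
\begin{align*}
\mathrm{DIST}_{ij} &= \lVert x_i - x_j \rVert^2
  = \lVert x_i \rVert^2 + \lVert x_j \rVert^2 - 2\, x_i^{\top} x_j
  && \text{(pairwise squared distances)} \\
K_{ij} &= \exp\!\left(-\frac{\mathrm{DIST}_{ij}}{\sigma}\right)
  && \text{(Gaussian kernel, } \sigma = 3 \text{ in the demo)} \\
U &= \frac{1}{N}\,\mathbf{1}\mathbf{1}^{\top}, \qquad
K_n = K - UK - KU + UKU
  && \text{(centering in feature space)} \\
K_n v_k &= \lambda_k v_k, \quad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_N
  && \text{(sorted eigenpairs)} \\
X_G &= K_n V = V \Lambda
  && \text{(column } k \text{ of } X_G \text{ equals } \lambda_k v_k\text{)}
\end{align*}
```

Note that the demo divides the squared distance by $\sigma$ directly rather than by $2\sigma^2$, so its `sigma` plays the role of a bandwidth on the squared-distance scale. The first T columns of $X_G$ give the T-feature representation.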

C) Retrain Linear Regression on the transformed D-dimensional data, where D is the number of Kernel PCA features kept from part B. How large does D need to be to get good performance?
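Parts A and C both compare a regression fit using classification error or Root Mean Squared Error. A minimal Python sketch of the two measures follows, assuming two classes encoded as 0/1 and a decision threshold of 0.5. The encoding, the threshold, the function names, and the sample values are all assumptions for illustration; a dataset with more than two classes (such as ThreeCircles) would need a different encoding.

```python
import math


def rmse(targets, predictions):
    """Root mean squared error between real-valued predictions and targets."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return math.sqrt(sum(squared) / len(targets))


def classification_error(targets, predictions, threshold=0.5):
    """Fraction of points whose thresholded prediction disagrees with the 0/1 label."""
    wrong = sum((p >= threshold) != (t == 1) for p, t in zip(predictions, targets))
    return wrong / len(targets)


# Made-up labels and regression outputs (illustration only).
y_true = [0, 0, 1, 1]
y_pred = [0.2, 0.6, 0.4, 0.9]

print(rmse(y_true, y_pred))
print(classification_error(y_true, y_pred))
```

Computing both measures on the same held-out points before and after the Kernel PCA transform makes the effect of D directly comparable.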

PROBLEM 6 - OPTIONAL (no credit) : Implement Kernel PCA on MNIST

A) First, add Gaussian noise to MNIST images.

B) Then rerun PCA on the noisy images (D=5 and D=20) and visually inspect the images obtained from the PCA representation.

C) Run Kernel PCA with the RBF kernel (D=5 and D=20) on the noisy images and observe that the resulting images look better.