What is EgoPER

Characteristics

Challenges

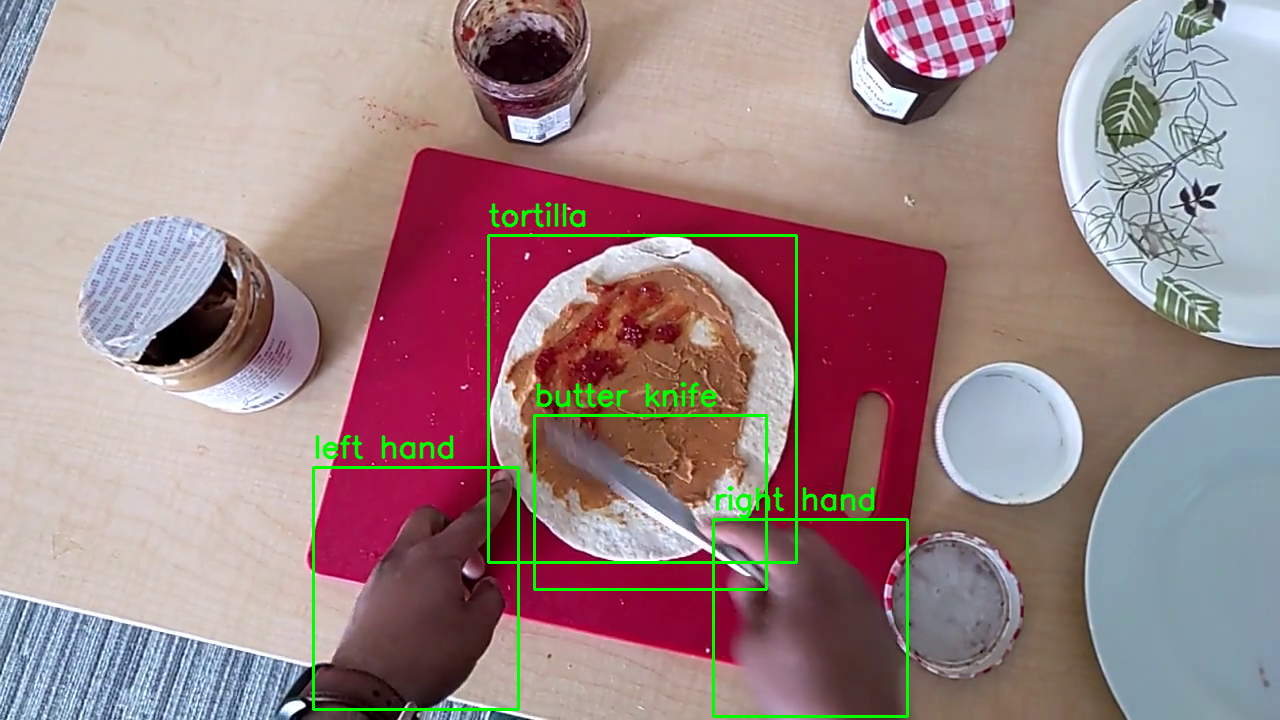

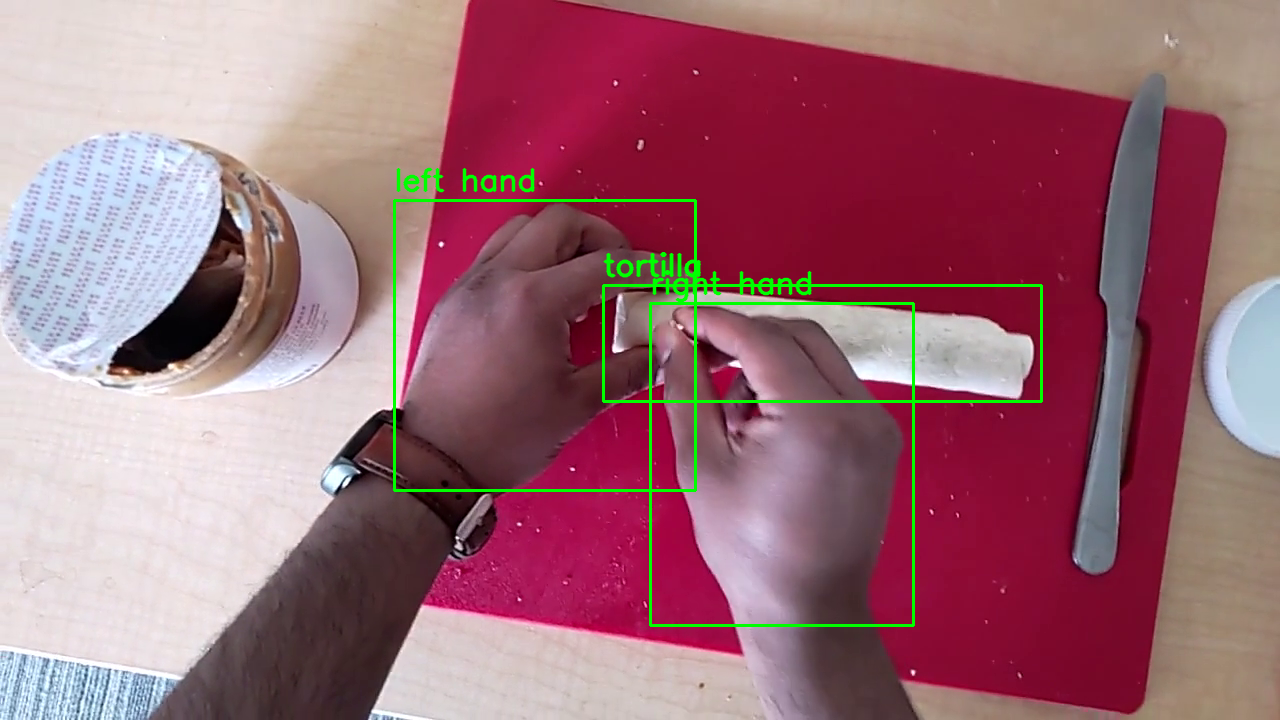

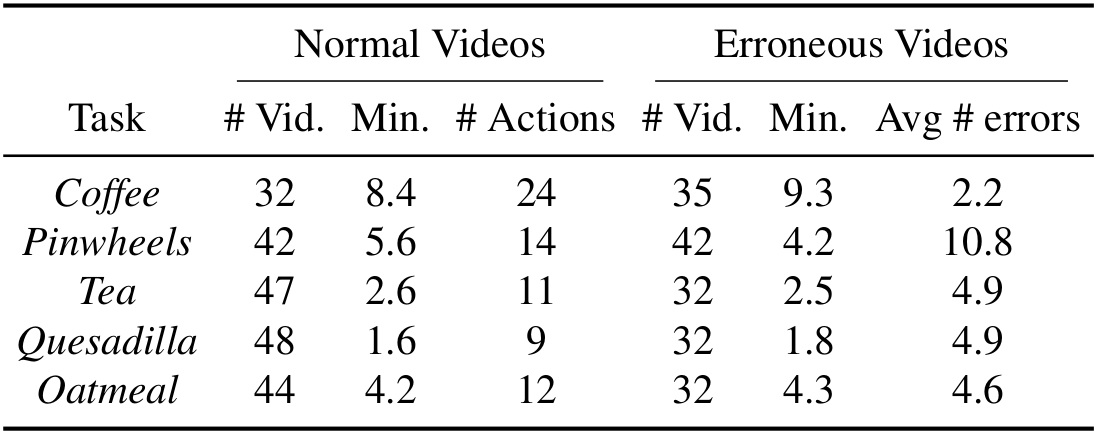

The dataset contains egocentric procedural task videos and other modalities such as audio, depth, hand tracking, etc, on 5 different cooking tasks - pinwheels, coffee, quesadilla, tea, and oatmeal.

Besides the correct/normal videos, EgoPER dataset contains erroneous/abnormal videos with 5 different categories - slip, correction, modification, addition, and omission.

- 28 Hours of Recording

- 5 Cooking Tasks

- Erroneous Videos

- 5 Error Types

- Multiple Modalities

- Frame-wise Step Labels

- Object Bounding Boxes

- Active Object Labels

- Temporal Action Segmentation

- Procedural Error Detection

- Action Recognition

- Active Object Detection